Chapter 4 Two-Way ANOVA

4.1 Situation

In this chapter, we extend the One-Way ANOVA to situations with two factors or categorical explanatory variables in a method that is generally called the Two-Way ANOVA. This allows researchers to simultaneously study two variables that might explain variability in the responses and explore whether the impacts of one explanatory variable change depending on the level of the other explanatory variable. In some situations, each observation is so expensive that researchers want to use a single study to explore two different sets of research questions in the same round of data collection. For example, a company might want to study factors that affect the number of defective products per day and are interested in the impacts of two different types of training programs and three different levels of production quotas. These methods would allow engineers to compare the training programs, production quotas, and see if the training programs “work differently” for different production quotas. In a clinical trials context, it is well known that certain factors can change the performance of certain drugs. For example, different dosages of a drug might have different benefits or side-effects on men, versus women or children or even for different age groups in adults. When the impact of one factor on the response changes depending on the level of another factor, we say that the two explanatory variables interact. It is also possible for both factors to be related to differences in the mean responses and not interact. For example, suppose there is a difference in the response variable means between young and old subjects and a difference in the responses among various dosages, but the effect of increasing the dosage is the same for both young and old subjects. This is an example of what is called an additive type of model. In general, the world is more complicated than the single factor models we considered in Chapter 3 can account for, especially in observational studies, so these models allow us to start to handle more realistic situations.

Consider the following “experiment” where we want to compare the strength of different brands of paper towels when they are wet. The response variable will be the time to failure in seconds (a continuous response variable) when a weight is placed on the towel held at the four corners. We are interested in studying the differences between brands and the impact of different amounts of water applied to the towels.

Predictors (Explanatory Variables): A:

Brand(2 brands of interest, named B1 and B2) and B: Number ofDropsof water (10, 20, 30 drops).Response: Time to failure (in seconds) of a towel (\(y\)) with a weight sitting in the middle of the towel.

4.2 Designing a two-way experiment and visualizing results

Ideally, we want to randomly assign the levels of each factor so that we can attribute causality to any detected effects and to reduce the chances of confounding, where the differences we think are due to one explanatory variable might be due to another variable that varied with the this explanatory variable of interest. Because there are two factors, we would need to design a random assignment scheme to select the levels of both variables. For example, we could randomly select a brand and then randomly select the number of drops to apply from the levels chosen for each measurement. Or we could decide on how many observations we want at each combination of the two factors (ideally having them all equal so the design is balanced) and then randomize the order of applying the different combinations of levels.

Why might it be important to randomly apply the brand and number of drops in an experiment? There are situations where the order of observations can be related to changes in the responses and we want to be able to eliminate the order of observations from being related to the levels of the factors – otherwise the order of observations and levels of the factors would be confounded. For example, suppose that the area where the experiment is being performed becomes wet over time and the later measurements have extra water that gets onto the paper towels and they tend to fail more quickly. If all the observations for the second brand were done later in the study, then the order of observations impacts could make the second brand look worse. If the order of measurements to be made is randomized, then even if there is some drift in the responses over the order of observations it should still be possible to see the differences in the randomly assigned effects. If the study incorporates repeated measurements on human or animal subjects, randomizing the order of treatments they are exposed to can alleviate impacts of them “learning” through the study or changing just due to being studied, something that we would not have to worry about with paper towels.

In observational studies, we do not have the luxury of random assignment, that is, we cannot randomly assign levels of the treatment variables to our subjects, so we cannot guarantee that the only differences between the groups are based on the differences in the explanatory variables. As discussed before, because we can’t control which level of the variables are assigned to the subjects, we cannot make causal inferences and have to worry about other variables being the real drivers of the results. Although we can never establish causal inference with observational studies, we can generalize our results to a larger population if we have a representative (ideally random) sample from our population of interest.

It is also possible that we might have studies where some of the variables are randomly assigned and others that are not randomly assignable. The most common versions of this are what we sometimes call subject “demographics”, such as gender, income, race, etc. We might be performing a study where we can randomly assign treatments to these subjects but might also want to account for differences based on income level, which we can’t assign. In these cases, the scope of inference gets complicated – differences seen on randomized variables can be causally interpreted but you have to be careful to not say that the demographics caused differences. Suppose that a randomly assigned drug dosage is found to show positive differences in older adults and negative changes in younger adults. We could say that the dosage causes the increases in older adults and decreases in younger ones, but we can’t say that age caused the differences in the responses – it just modified how the drug works and what the drug caused to happen in the responses.

Even when we do have random assignment of treatments it is important to think about who/what is included in the sample. To get back to the paper towel example, we are probably interested in more than the sheets of the rolls we have to work with. If we could randomly select the studied paper towels from all paper towels made by each brand, our conclusions could be extended to those populations. That probably would not be practical, but trying to make sure that the towels are representative of all made by each brand by checking for defects and maybe picking towels from a few different rolls would be a good start to being able to extend inferences beyond the tested towels. But if you were doing this study in the factory, it might be possible to randomly sample from the towels produced, at least over the course of a day.

Once random assignment and random sampling is settled, the final aspect of study design involves deciding on the number of observations that should be made. The short (glib) answer is to take as many as you can afford. With more observations comes higher power to detect differences if they exist, which is a desired attribute of all studies. It is also important to make sure that you obtain multiple observations at each combination of the treatment levels, which are called replicates. Having replicate measurements allows estimation of the mean for each combination of the treatment levels as well as estimation and testing for an interaction. And we always prefer80 having balanced designs because they provide resistance to violation of some assumptions as was discussed in Chapter 3. A balanced design in a Two-Way ANOVA setting involves having the same sample size for every combination of the levels of the two factor variables in the model.

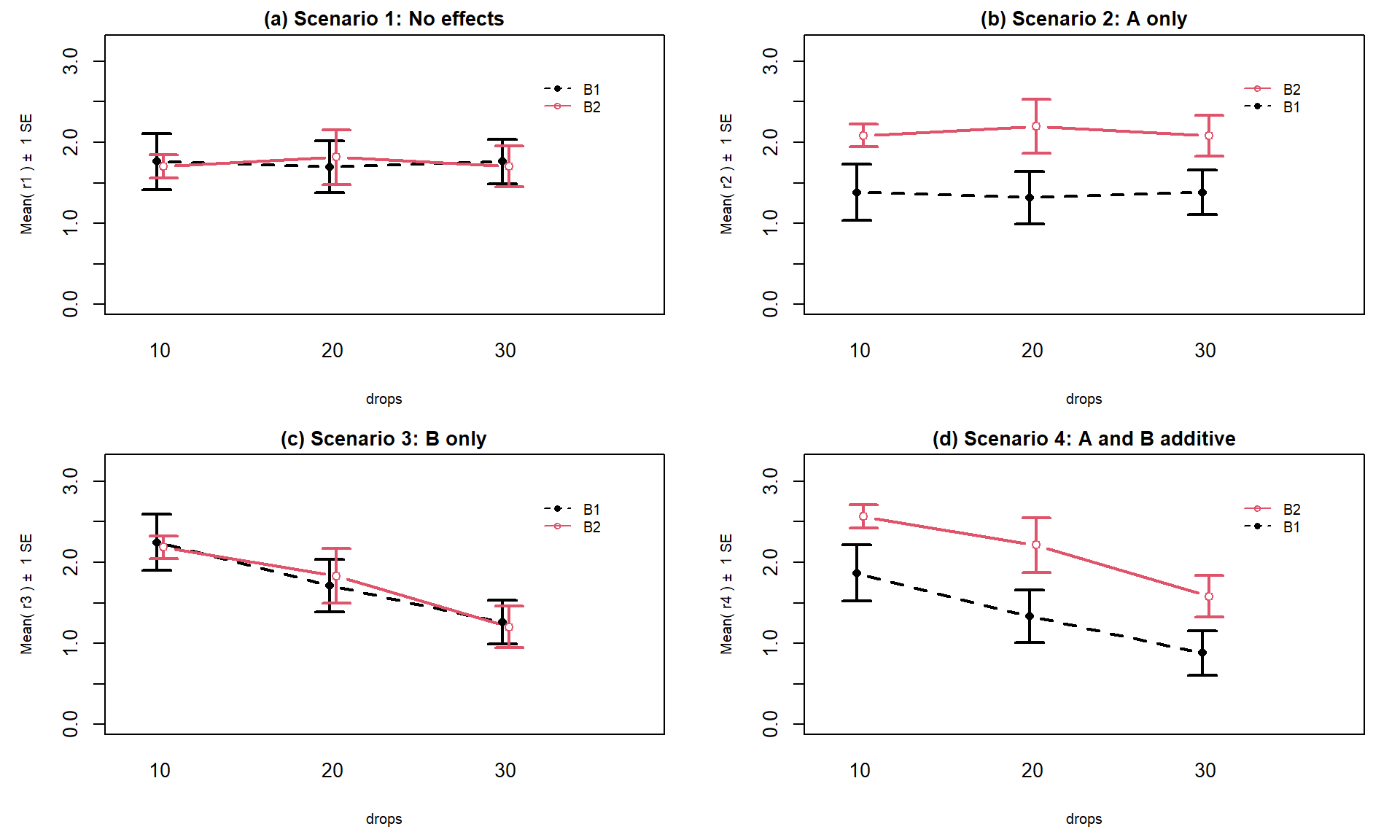

With two categorical explanatory variables, there are now five possible scenarios for the truth. Different situations are created depending on whether there is an interaction between the two variables, whether both variables are important but do not interact, or whether either of the variables matter at all. Basically, there are five different possible outcomes in a randomized Two-Way ANOVA study, listed in order of increasing model complexity:

Neither A or B has an effect on the responses (nothing causes differences in responses).

A has an effect, B does not (only A causes differences in responses).

B has an effect, A does not (only B causes differences in responses).

Both A and B have effects on response but no interaction (A and B both cause differences in responses but the impacts are additive).

Effect of A on response differs based on the levels of B, the opposite is also true (means for levels of response across A are different for different levels of B, or, simply, A and B interact in their effect on the response).

To illustrate these five potential outcomes, we will consider a fake version of the paper towel example. It ended up being really messy and complicated to actually perform the experiment as described so these data were simulated. The hope is to use this simple example to illustrate some of the Two-Way ANOVA possibilities. The first step is to understand what has been observed (number observations at each combination of factors) and look at some summary statistics across all the “groups”. The data set is available via the following link:

library(readr)

pt <- read_csv("http://www.math.montana.edu/courses/s217/documents/pt.csv")

pt <- pt %>% mutate(drops = factor(drops),

brand = factor(brand)

)The data set contains five observations per combination of treatment levels as

provided by the tally function. To get counts for combinations of the

variables, use the general formula of tally(x1 ~ x2, data = ...) – noting that the order of x1 and x2 doesn’t matter here:

library(mosaic)

tally(brand ~ drops, data = pt)## drops

## brand 10 20 30

## B1 5 5 5

## B2 5 5 5The sample sizes in each of the six treatment level combinations of Brand

and Drops [(B1, 10), (B1, 20), (B1, 30), (B2, 10), (B2, 20),

(B2, 30)] are \(n_{jk} = 5\) for \(j^{th}\) level of Brand (\(j = 1, 2\)) and

\(k^{th}\) level of Drops (\(k = 1, 2, 3\)). The tally function gives us an \(R\) by \(C\)

contingency table with \(R = 2\) rows (B1, B2) and \(C = 3\) columns

(10, 20, and 30).

We’ll have more fun with \(R\) by \(C\)

tables in Chapter 5 – here it helps us to see the sample size in

each combination of factor levels. The favstats function also helps us

dig into the results for all combinations of factor levels. The notation

involves putting both factor variables after the “~” with a “+” between them.

In the output, the first row contains summary information for the

5 observations for Brand B1 and Drops amount 10. It also contains the

sample size in the n column, although here it rolled into a new set of

rows with the standard deviations of each combination.

favstats(responses ~ brand + drops, data = pt)## brand.drops min Q1 median Q3 max mean sd n missing

## 1 B1.10 0.3892621 1.3158737 1.906436 2.050363 2.333138 1.599015 0.7714970 5 0

## 2 B2.10 2.3078095 2.8556961 3.001147 3.043846 3.050417 2.851783 0.3140764 5 0

## 3 B1.20 0.3838299 0.7737965 1.516424 1.808725 2.105380 1.317631 0.7191978 5 0

## 4 B2.20 1.1415868 1.9382142 2.066681 2.838412 3.001200 2.197219 0.7509989 5 0

## 5 B1.30 0.2387500 0.9804284 1.226804 1.555707 1.829617 1.166261 0.6103657 5 0

## 6 B2.30 0.5470565 1.1205102 1.284117 1.511692 2.106356 1.313946 0.5686485 5 0The next step is to visually explore the results across the combinations

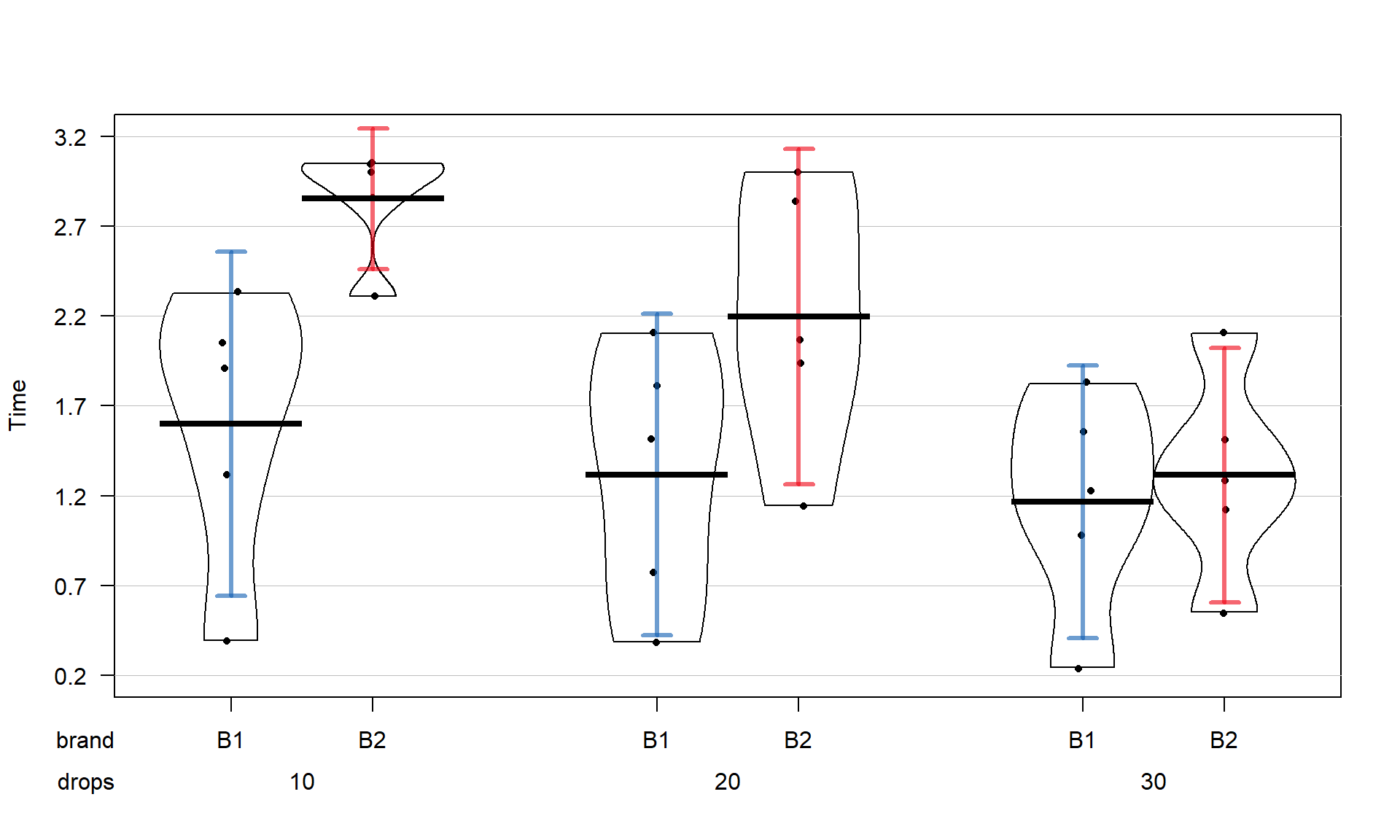

of the two explanatory variables. The pirate-plot can be extended to handle these

sorts of two-way situations using a formula that is something like y ~ A * B.

The x-axis in the pirate-plot shows two rows of labels based on the two categories and the unique combinations of those categories are directly related to a displayed distribution of responses and mean and confidence interval. For example, in Figure 4.1, the Brand with levels of B1 and B2 is the first row of x-axis labels and they are repeated across the three levels of Drops. In reading these plots, look for differences in the means across the levels of the first row variable (Brand) for each level of the second row variable (Drops) and then focus on whether those differences change across the levels of the second variable – that is an interaction as the differences in differences change.

Specifically, start with comparing the two brands at each amount of water.

Do the brands seem different? Certainly for 10 drops of water the two look

different but not for 30 drops, suggesting a different impact of brands based on the amount of water present. We can also look for combinations of factors

that produce the highest or lowest responses in this display. It appears that

the time to failure is highest in the low water drop groups but as the water

levels increase, the time to failure falls and the differences in the two

brands seem to decrease. The fake data seem to have relatively similar

amounts of variability and distribution shapes except for 10 drops and brand B2 – remembering that there are

only 5 observations available for describing the shape of responses for each

combination. These data were simulated using a normal distribution with

constant variance if that gives you some extra confidence in assessing these

model assumptions.

library(yarrr)

set.seed(12)

pirateplot(responses ~ brand * drops, data = pt, xlab = "Drops", ylab = "Time",

inf.method = "ci", inf.disp = "line", theme = 2, point.o = 1)

Figure 4.1: Pirate-plot of paper towel data by Brand (first row of \(x\)-axis) and Drops (second row of \(x\)-axis).

The pirate-plots can handle situations where both variables have more than two

levels but it can sometimes get a bit cluttered to actually display the data when our analysis is going to focus on means of the responses. The means for each combination

of levels that you can find in the favstats output are more usefully used

in what is called an interaction plot.

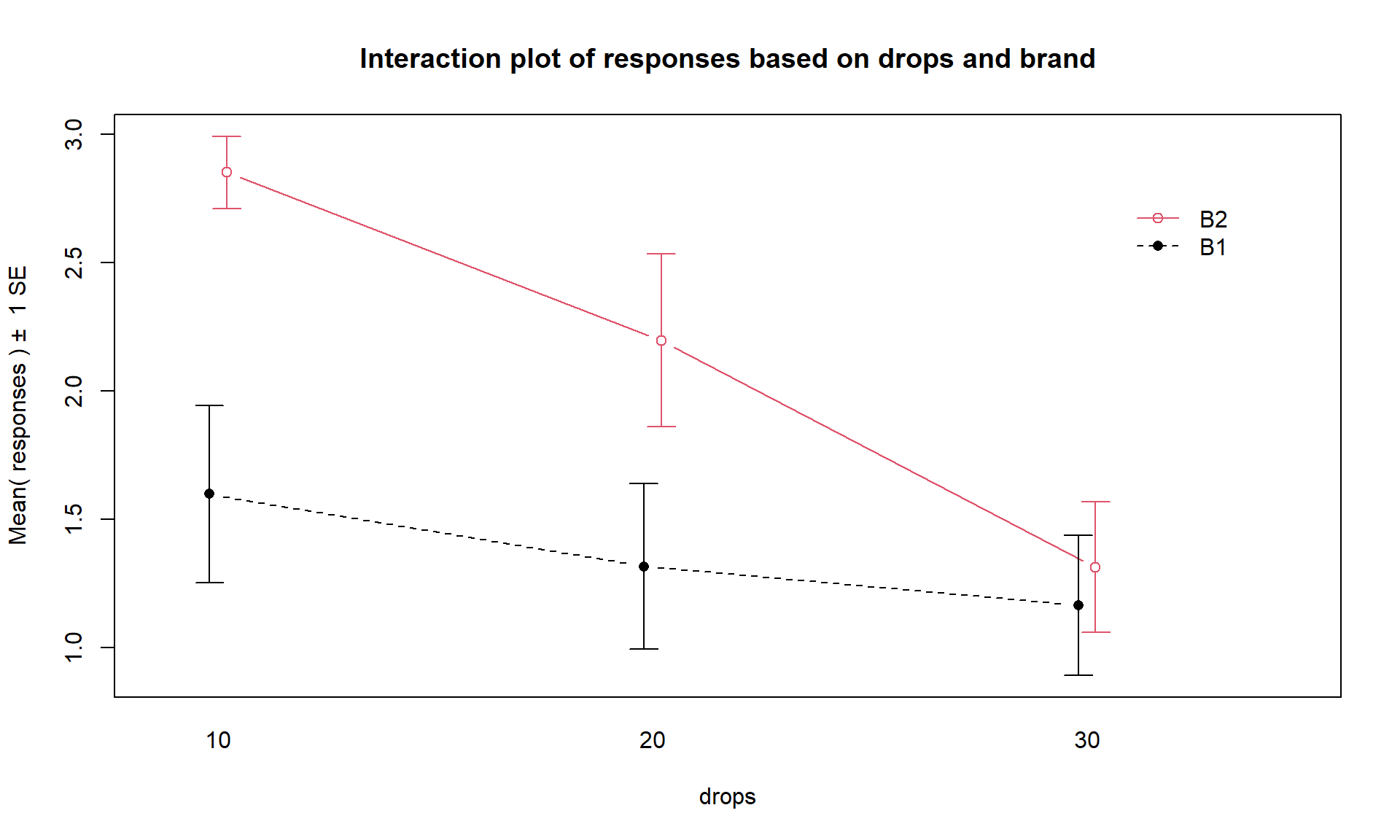

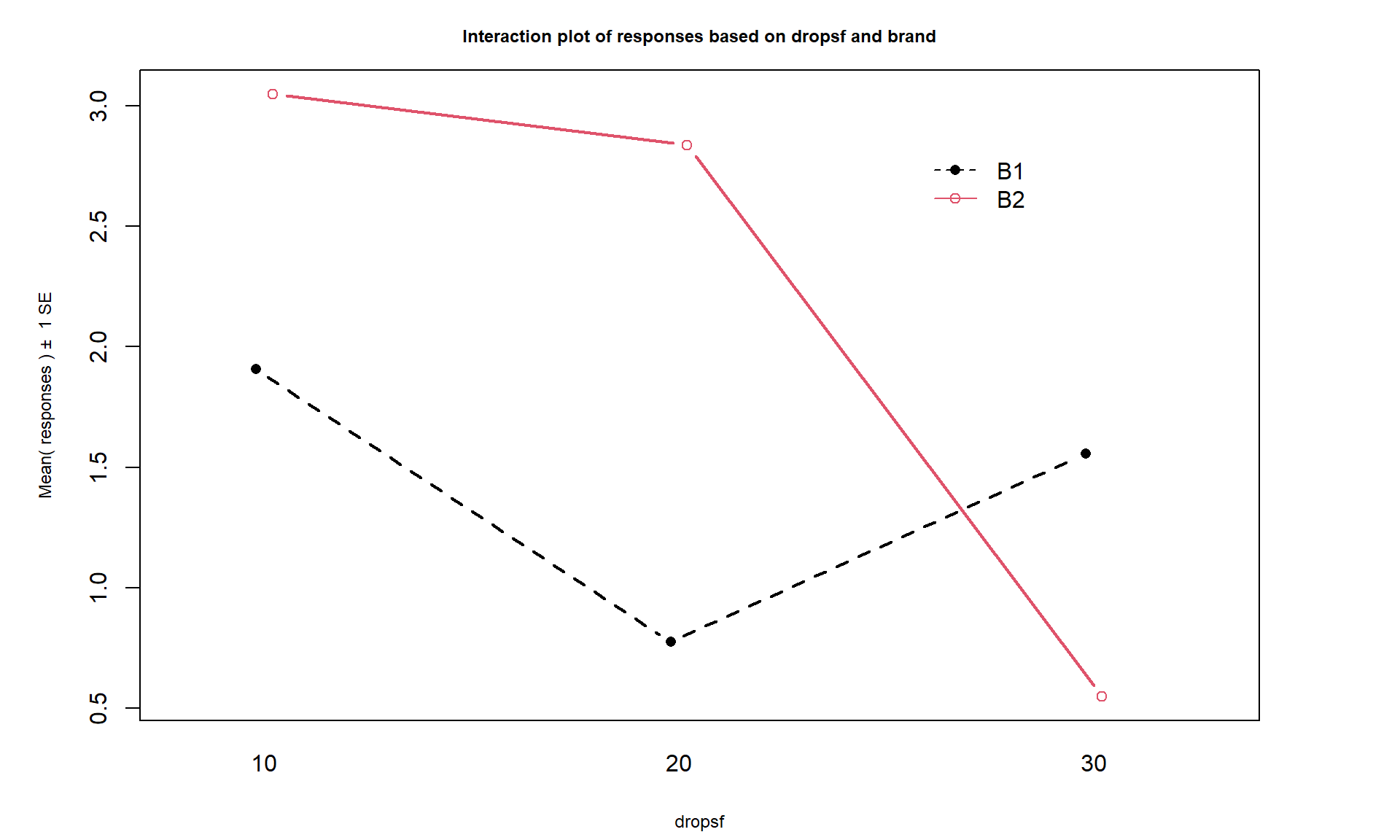

Interaction plots display the mean

responses (y-axis) versus levels of one predictor variable on the x-axis,

adding points and separate lines for each level of the other predictor variable. Because

we don’t like any of the available functions in R, we wrote our own function. It is available two ways. The easiest, if it works, is to install and load the catstats R package (M. Greenwood, Hancock, and Carnegie 2022). If you are working on a local RStudio installation, the first step involves installing the remotes (Csárdi et al. 2021) R package and then loading it – this will allow you to install catstats from our github81 repository (you can type “3” during the installation to avoid updating other packages when you do this step).

# To install the catstats R package (just the first time!):

library(remotes)

remotes::install_github("greenwood-stat/catstats")After that step, you can load catstats like any other R package, using

library(catstats). Some users have experienced issues with getting this

package installed, so you can also download the needed intplot and

intplotarray functions82 using:

source("http://www.math.montana.edu/courses/s217/documents/intplotfunctions_v3.R")The intplot function allows a formula interface like Y ~ X1 * X2 and provides the means \(\pm\) 1 SE (vertical bars) and adds a legend to help make

everything clear.

intplot(responses ~ brand * drops, data = pt)

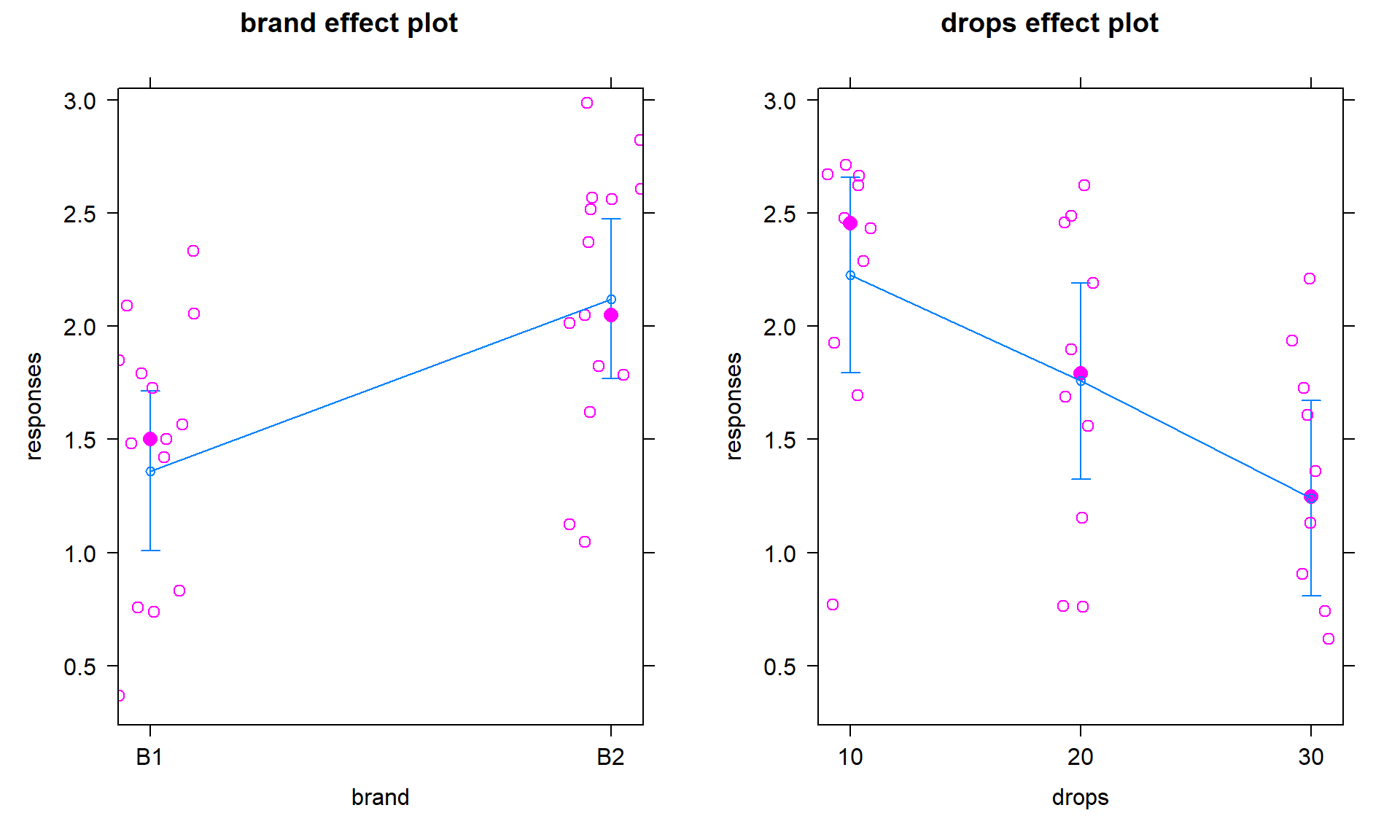

Figure 4.2: Interaction plot of the paper towel data with Drops on the x-axis and different lines based on Brand.

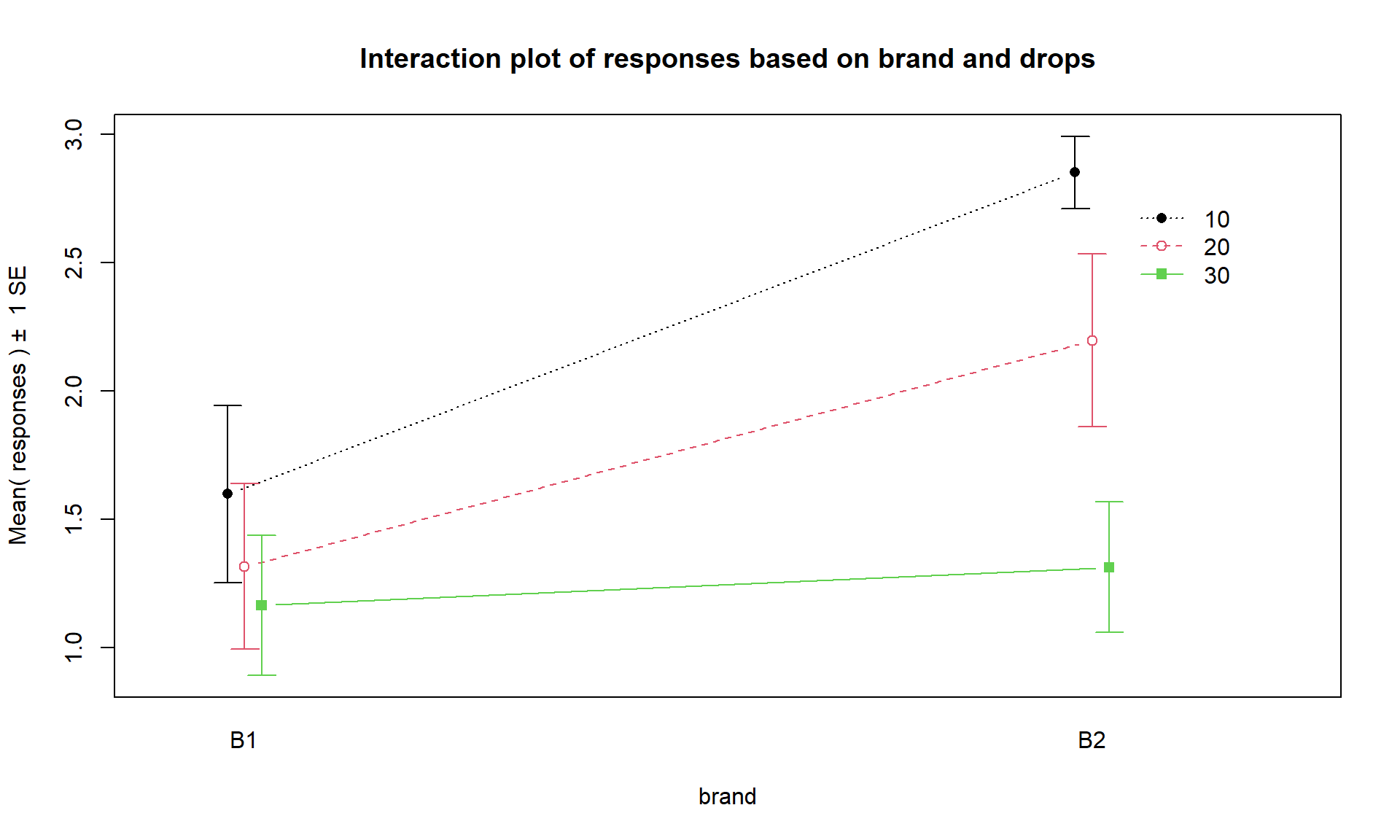

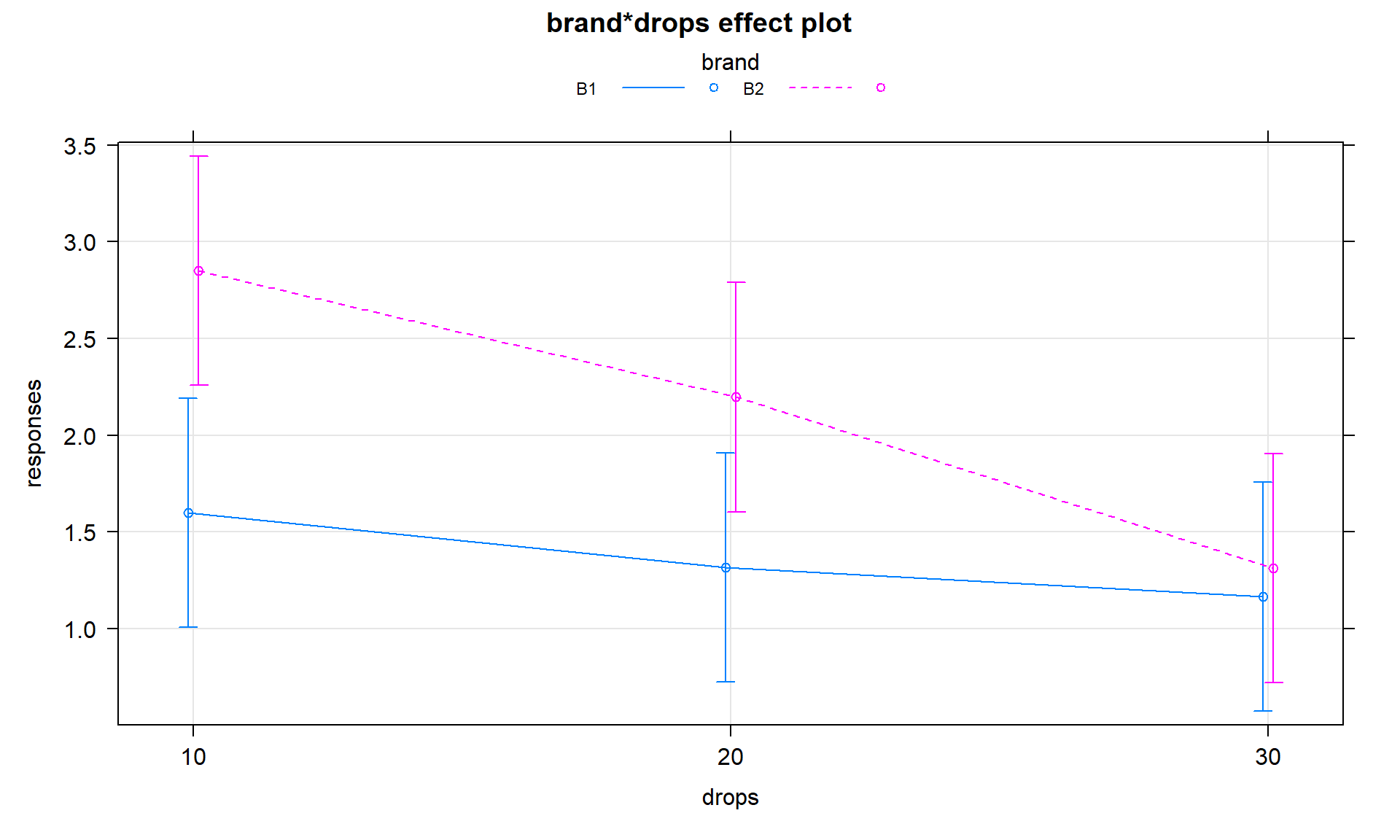

Interaction plots can always be made two different ways by switching the order

of the variables. Figure 4.2 contains Drops on the x-axis

and Figure 4.3 has Brand on the x-axis. Typically putting

the variable with more levels on the x-axis will make interpretation easier,

but not always. Try both and decide on the one that you like best.

intplot(responses ~ drops * brand, data = pt)

Figure 4.3: Interaction plot of paper towel data with Brand on the x-axis and lines based on Drops.

The formula in this function builds on our previous notation and now we include

both predictor variables with an “*” between them. Using an asterisk between

explanatory variables is one way of telling R to include an interaction between

the variables. While the interaction may or may not be present, the interaction

plot helps us to explore those potential differences.

There are a variety of aspects of the interaction plots to pay attention to. Initially, the question to answer is whether it appears that there is an interaction between the predictor variables. When there is an interaction, you will see non-parallel lines in the interaction plot. You want to look from left to right in the plot and assess whether the lines connecting the means are close to parallel, relative to the amount of variability in the estimated means as represented by the SEs in the bars. If it seems that there is clear visual evidence of non-parallel lines, then the interaction is likely worth considering (we will use a hypothesis test to formally assess this – see the discussion below). If the lines look to be close to parallel, then there probably isn’t an interaction between the variables. Without an interaction present, that means that the differences in the response across levels of one variable doesn’t change based on the levels of the other variable and vice-versa. This means that we can consider the main effects of each variable on their own83. Main effects are much like the results we found in Chapter 3 where we can compare means across levels of a single variable except that there are results for two variables to extract from the model. With the presence of an interaction, it is complicated to summarize how each variable is affecting the response variable because their impacts change depending on the level of the other factor. And plots like the interaction plot provide us with useful information on the pattern of those changes.

If the lines are not parallel, then

focus in on comparing the levels of one variable as the other variable changes.

Remember that the definition of an interaction is that the differences among

levels of one variable depends on the level of the other variable being

considered. “Visually” this means comparing the size of the differences in the

lines from left to right. In Figures 4.2 and 4.3,

the effect of amount of water

changes based on the brand being considered. In Figure 4.3,

the three lines

represent the three water levels. The difference between the brands (left to

right, B1 to B2) is different depending on how much water was present. It

appears that Brand B2 lasted longer at the lower water levels but that the

difference between the two brands dropped as the water levels increased. The

same story appears in Figure 4.2. As the

water levels increase (left to right, 10 to 20 to 30 drops), the differences

between the two brands decrease. Of the two versions, Figure 4.2

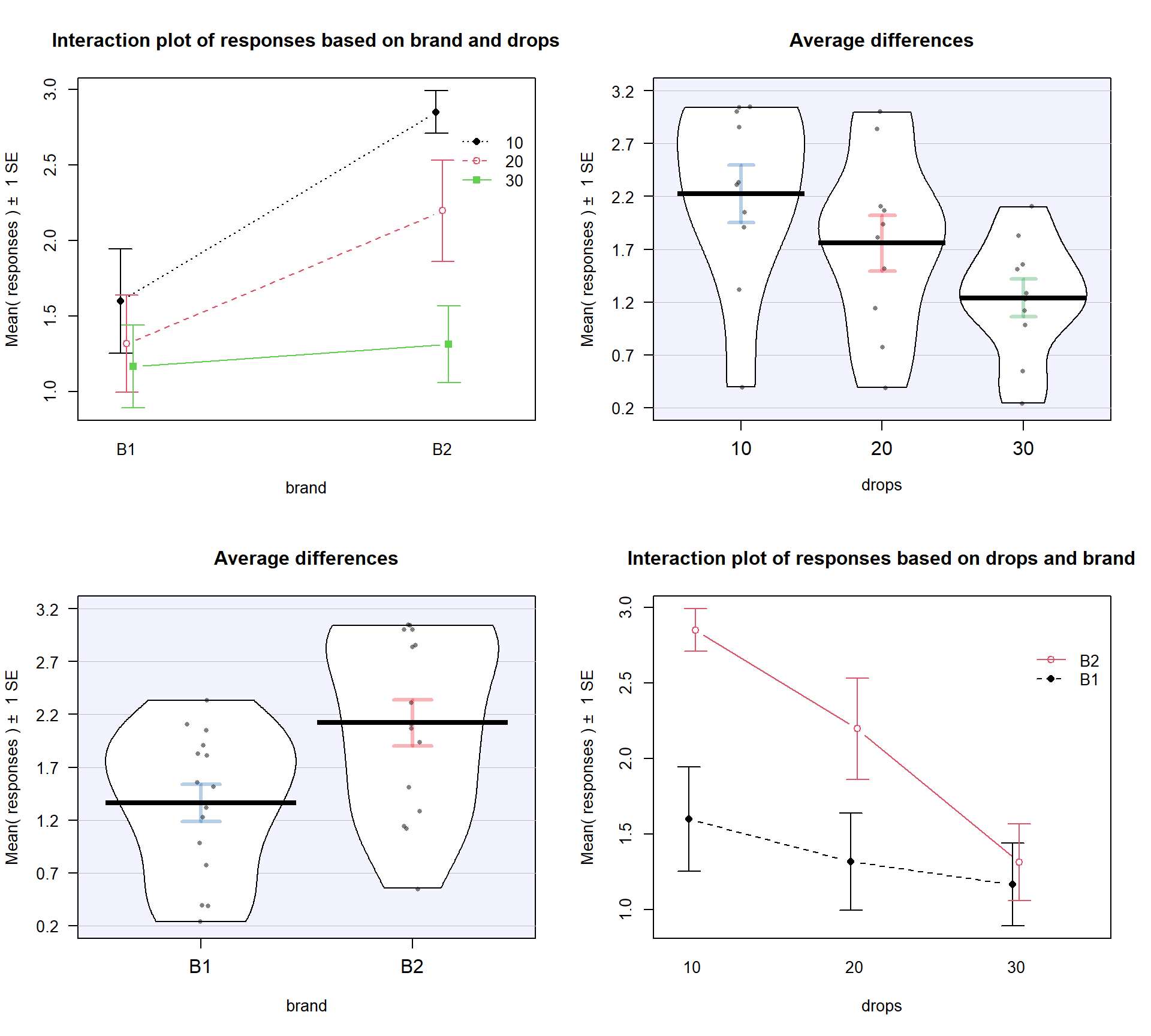

is probably easier to read here. Sometimes it is nice to see the interaction plot made both ways simultaneously, so you can also use the intplotarray function, which provides Figure 4.4. This plot also adds pirate-plots to the off-diagonals so you can explore the main effects of each variable, if that is reasonable.

The interaction plots can be used to

identify the best and worst mean responses for combinations of the treatment

levels. For example, 10 Drops and Brand B2 lasts longest, on average, and 30 Drops with Brand B1 fails fastest, on average. In any version of the plot here, the lines do not appear to be parallel suggesting that further exploration of the

interaction appears to be warranted.

intplotarray(responses ~ drops * brand, data = pt)

Figure 4.4: Interaction plot array of paper towel data with two different versions of interaction plots and pirate-plots of the responses versus each explanatory variable.

Before we get to the hypothesis tests

to formally make this assessment (you knew some sort of p-value was coming, right?),

we can visualize the 5 different scenarios that could characterize the sorts of

results you could observe in a Two-Way ANOVA situation. Figure 4.5

shows 4 of the 5 scenarios. In panel (a), when there are no differences from either

variable (Scenario 1), it provides relatively parallel lines and basically no

differences either across Drops levels (x-axis) or Brand (lines). Data such as these would

likely result in little to no evidence related to a difference in brands, water

levels, or any interaction between them in this data set.

Figure 4.5: Interaction plots of four possible scenarios in the paper towel study.

Scenario 2 (Figure 4.5 panel (b))

incorporates differences based on factor A (here that is Brand) but no real

difference based on the Drops or any interaction. This results in a clear shift between the lines for the means of the Brands but little to no changes in the level of those lines across water levels.

These lines are relatively parallel. We can see that Brand B2 is better than

Brand B1 but that is all we can show with these sorts of results.

Scenario 3 (Figure 4.5 panel (c)) flips the important variable

to B (Drops)

and shows decreasing average times as the water levels increase. Again, the

interaction panels show near parallel-ness in the lines and really just show differences

among the levels of the water. In both Scenarios 2 and 3, we could use a single

variable and drop the other from the model, getting back to a One-Way ANOVA

model, without losing any important information.

Scenario 4 (Figure 4.5 panel (d)) incorporates

effects of A and B, but they are additive. That means that the effect

of one variable is the same across the levels of the other variable. In this

experiment, that would mean that Drops has the same impact on performance

regardless of brand and that the brands differ but each type of difference is

the same regardless of levels of the other variable.

The interaction plot lines are more or less parallel but now the brands are

clearly different from each other. The plot shows the decrease in performance

based on increasing water levels and that Brand B2 is better than

Brand B1. Additive effects show the same difference in lines from left to

right in the interaction plots.

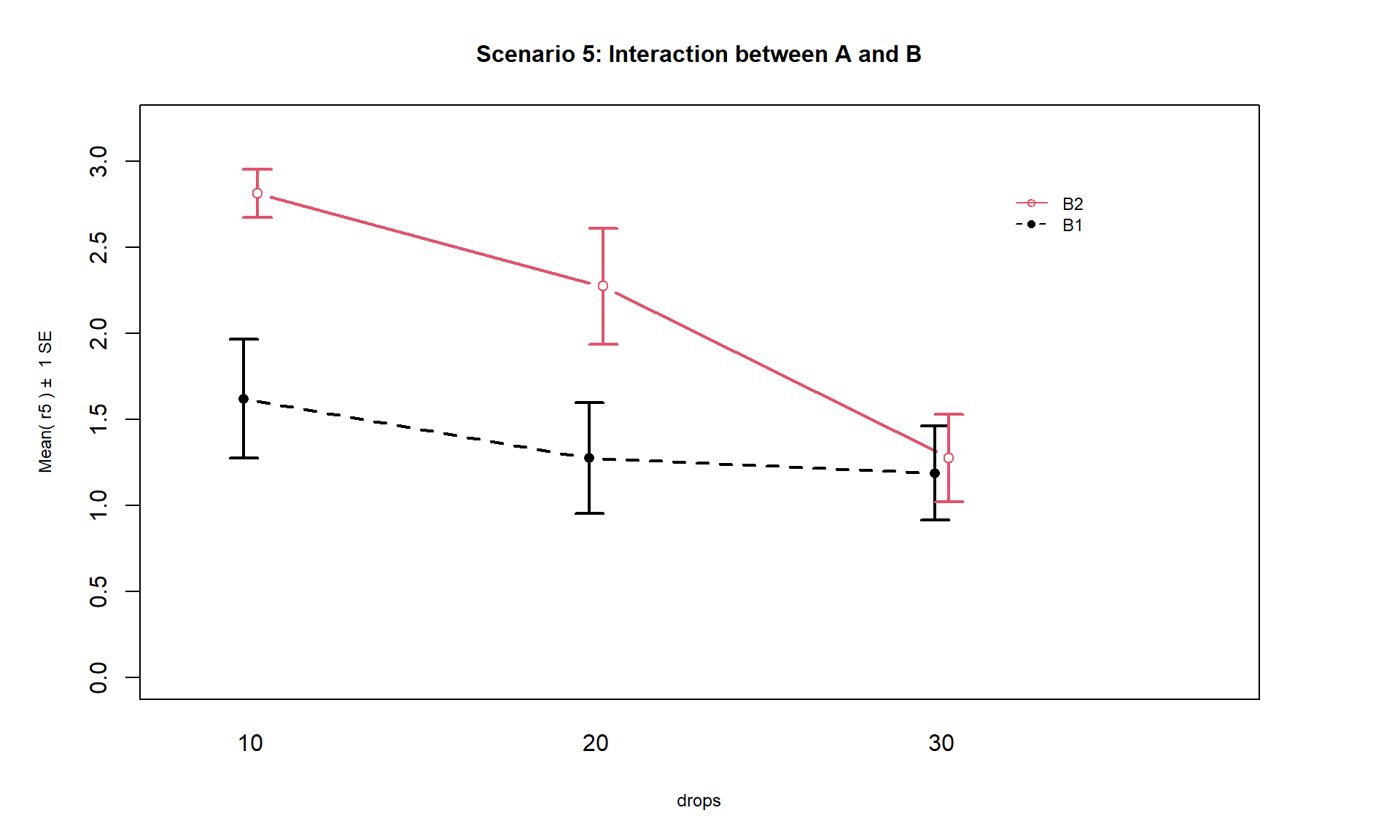

Finally, Scenario 5 (Figure 4.6) involves

an interaction between the two variables (Drops and Brand). There are many ways

that interactions can present but the main thing is to look for clearly

non-parallel lines.

As noted in the previous discussion, the Drops effect

appears to change depending on which level of Brand is being considered.

Note that the plot here described as Scenario 5 is the same as the initial plot

of the results in Figure 4.2.

Figure 4.6: Interaction plot of Scenario 5 where it appears that an interaction is present.

The typical modeling protocol is to start with assuming that Scenario 5 is a possible description of the results, related to fitting what is called the interaction model, and then attempt to simplify the model (to the additive model) if warranted. We need a hypothesis test to help decide if the interaction is “real”. We start with assuming there is no interaction between the two factors in their impacts on the response and assess evidence against that null hypothesis. We need a hypothesis test because the lines will never be exactly parallel in real data and, just like in the One-Way ANOVA situation, the amount of variation around the lines impacts the ability of the model to detect differences, in this case of an interaction.

4.3 Two-Way ANOVA models and hypothesis tests

To assess interactions with two variables, we need to fully describe models for the additive and interaction scenarios and then develop a method for assessing evidence of the need for different aspects of the models. First, we need to define the notation for these models:

\(y_{ijk}\) is the \(i^{th}\) response from the group for level \(j\) of factor A and level \(k\) of factor B

\(j = 1,\ldots,J\) \(J\) is the number of levels of A

\(k = 1,\ldots,K\) \(K\) is the number of levels of B

\(i = 1,\ldots,n_{jk}\) \(n_{jk}\) is the sample size for level \(j\) of factor A and level \(k\) of factor B

\(N = \Sigma_j\Sigma_k n_{jk}\) is the total sample size (sum of the number of observations across all \(JK\) groups)

We need to extend our previous discussion of reference-coded models to develop a Two-Way ANOVA model. We start with the Two-Way ANOVA interaction model:

\[y_{ijk} = \alpha + \tau_j + \gamma_k + \omega_{jk} + \varepsilon_{ijk},\]

where \(\alpha\) is the baseline group mean (for level 1 of A and level 1 of B), \(\tau_j\) is the deviation for the main effect of A from the baseline for levels \(2,\ldots,J\), \(\gamma_k\) (gamma \(k\)) is the deviation for the main effect of B from the baseline for levels \(2,\ldots,K\), and \(\omega_{jk}\) (omega \(jk\)) is the adjustment for the interaction effect for level \(j\) of factor A and level \(k\) of factor B for \(j = 1,\ldots,J\) and \(k = 1,\ldots,K\). In this model, \(\tau_1\), \(\gamma_1\), and \(\omega_{11}\) are all fixed at 0 because \(\alpha\) is the mean for the combination of the baseline levels of both variables and so no adjustments are needed. Additionally, any \(\omega_{jk}\)’s that contain the baseline category of either factor A or B are also set to 0 and the model for these levels just involves \(\tau_j\) or \(\gamma_k\) added to the intercept. Exploring the R output will help clarify which coefficients are present or set to 0 (so not displayed) in these models. As in Chapter 3, R will typically choose the baseline categories alphabetically but now it is choosing a baseline for both variables and so our detective work will be doubled to sort this out.

If the interaction term is not important, usually based on the interaction test presented below, the \(\omega_{jk}\text{'s}\) can be dropped from the model and we get a model that corresponds to Scenario 4 above. Scenario 4 is where there are two main effects in the model but no interaction between them. The additive Two-Way model is

\[y_{ijk} = \alpha + \tau_j + \gamma_k + \varepsilon_{ijk},\]

where each component is defined as in the interaction model. The difference between the interaction and additive models is setting all the \(\omega_{jk}\text{'s}\) to 0 that are present in the interaction model. When we set parameters to 0 in models it removes them from the model. Setting parameters to 0 is also how we will develop our hypotheses to test for an interaction, by assessing evidence against a null hypothesis that all \(\omega_{jk}\text{'s} = 0\).

The interaction test hypotheses are

\(H_0\): No interaction between A and B on response in population \(\Leftrightarrow\) All \(\omega_{jk}\text{'s} = 0\).

\(H_A\): Interaction between A and B on response in population \(\Leftrightarrow\) At least one \(\omega_{jk}\ne 0\).

To perform this test, a new ANOVA \(F\)-test is required (presented below) but there are also hypotheses relating to the main effects of A (\(\tau_j\text{'s}\)) and B (\(\gamma_k\text{'s}\)). If you decide that there is sufficient evidence against the null hypothesis that no interaction is present to conclude that one is likely present, then it is dangerous to ignore the interaction and test for the main effects because important main effects can be masked by interactions (examples later). It is important to note that, by definition, both variables matter if an interaction is found to be important so the main effect tests may not be very interesting in an interaction model. If the interaction is found to be important based on the test and so is retained in the model, you should focus on the interaction model (also called the full model) in order to understand and describe the form of the interaction among the variables.

If the interaction test does not return a small p-value and you decide that you do not have enough evidence against the null hypothesis to suggest that the interaction is needed, the interaction can be dropped from the model. In this situation, we would re-fit the model and focus on the results provided by the additive model – performing tests for the two additive main effects. For the first, but not last time, we encounter a model with more than one variable and more than one test of potential interest. In models with multiple variables at similar levels (here both are main effects), we are interested in the results for each variable given that the other variable is in the model. In many situations, including more than one variable in a model changes the results for the other variable even if those variables do not interact. The reason for this is more clear in Chapter 8 and really only matters here if we have unbalanced designs, but we need to start adding a short modifier to our discussions of main effects – they are the results conditional on or adjusting for or, simply, given, the other variable(s) in the model. Specifically, the hypotheses for the two main effects are:

Main effect test for A:

\(H_0\): No differences in means across levels of A in population, given B in the model

\(\Leftrightarrow\) All \(\tau_j\text{'s} = 0\) in additive model.

\(H_A\): Some difference in means across levels A in population, given B in the model

\(\Leftrightarrow\) At least one \(\tau_j \ne 0\), in additive model.

Main effect test for B:

\(H_0\): No differences in means across levels of B in population, given A in the model

\(\Leftrightarrow\) All \(\gamma_k\text{'s} = 0\) in additive model.

\(H_A\): Some difference in means across levels B in population, given A in the model

\(\Leftrightarrow\) At least one \(\gamma_k \ne 0\), in additive model.

In order to test these effects (interaction in the interaction model and main effects in the additive model), \(F\)-tests are developed using Sums of Squares, Mean Squares, and degrees of freedom similar to those in Chapter 3. We won’t worry about the details of the sums of squares formulas but you should remember the sums of squares decomposition, which still applies84. Table 4.1 summarizes the ANOVA results you will obtain for the interaction model and Table 4.2 provides the similar general results for the additive model. As we saw in Chapter 3, the degrees of freedom are the amount of information that is free to vary at a particular level and that rule generally holds here. For example, for factor A with \(J\) levels, there are \(J-1\) parameters that are free since the baseline is fixed. The residual degrees of freedom for both models are not as easily explained but have a simple formula. Note that the sum of the degrees of freedom from the main effects, (interaction if present), and error need to equal \(N-1\), just like in the One-Way ANOVA table.

| Source | DF | SS | MS | F-statistics |

|---|---|---|---|---|

| A | \(J-1\) | \(\text{SS}_A\) | \(\text{MS}_A = \text{SS}_A/\text{df}_A\) | \(\text{MS}_A/\text{MS}_E\) |

| B | \(K-1\) | \(\text{SS}_B\) | \(\text{MS}_B = \text{SS}_B/\text{df}_B\) | \(\text{MS}_B/\text{MS}_E\) |

| A:B (interaction) | \((J-1)(K-1)\) | \(\text{SS}_{AB}\) | \(\text{MS}_{AB} = \text{SS}_{AB}/\text{df}_{AB}\) | \(\text{MS}_{AB}/\text{MS}_E\) |

| Error | \(N-JK\) | \(\text{SS}_E\) | \(\text{MS}_E = \text{SS}_E/\text{df}_E\) | |

| Total | \(\color{red}{\mathbf{N-1}}\) | \(\color{red}{\textbf{SS}_{\textbf{Total}}}\) |

| Source | DF | SS | MS | F-statistics |

|---|---|---|---|---|

| A | \(J-1\) | \(\text{SS}_A\) | \(\text{MS}_A = \text{SS}_A/\text{df}_A\) | \(\text{MS}_A/\text{MS}_E\) |

| B | \(K-1\) | \(\text{SS}_B\) | \(\text{MS}_B = \text{SS}_B/\text{df}_B\) | \(\text{MS}_B/\text{MS}_E\) |

| Error | \(N-J-K+1\) | \(\text{SS}_E\) | \(\text{MS}_E = \text{SS}_E/\text{df}_E\) | |

| Total | \(\color{red}{\mathbf{N-1}}\) | \(\color{red}{\textbf{SS}_{\textbf{Total}}}\) |

The mean squares are formed by taking the sums of squares (we’ll let R find those

for us) and dividing by the \(df\) in the row.

The \(F\)-ratios are found by taking

the mean squares from the row and dividing by the mean squared error (\(\text{MS}_E\)).

They follow \(F\)-distributions with numerator degrees

of freedom from the row and denominator degrees of freedom from the Error row

(in R output this the Residuals row).

It is possible to develop permutation

tests for these methods but some

technical issues arise in doing permutation tests for interaction model components

so we will not use them here. This means we will have to place even more

emphasis on the data not presenting clear violations of assumptions since we only have the parametric method

available.

With some basic expectations about the ANOVA tables and \(F\)-statistic construction

in mind, we can get to actually estimating the models and exploring the results.

The first example involves the fake paper towel data

displayed in Figure 4.1 and 4.2. It appeared

that Scenario 5 was the correct

story since the lines appeared to be non-parallel, but we need to know whether there is

sufficient evidence to suggest that the interaction is “real” and we get that through the

interaction hypothesis test. To fit the interaction model using lm,

the general formulation is lm(y ~ x1 * x2, data = ...). The

order of the variables doesn’t matter as the most important part of the model,

to start with, relates to the interaction of the variables.

The ANOVA table output

shows the results for the interaction model obtained by running the anova

function on the model called m1.

Specifically, the test that

\(H_0: \text{ All } \omega_{jk}\text{'s} = 0\) has a

test statistic of \(F(2,24) = 1.92\) (in the output from the row with

brands:drops) and a p-value of 0.17. So there is weak evidence against the null hypothesis of no interaction, with a 17% chance we would

observe a difference in the \(\omega_{jk}\text{'s}\) like we did or more

extreme if the \(\omega_{jk}\text{'s}\) really were all 0. So we would conclude that the interaction is probably not needed85. Note that for the interaction

model components, R presents them with a colon, :, between the variable

names.

m1 <- lm(responses ~ brand * drops, data = pt)

anova(m1)## Analysis of Variance Table

##

## Response: responses

## Df Sum Sq Mean Sq F value Pr(>F)

## brand 1 4.3322 4.3322 10.5192 0.003458

## drops 2 4.8581 2.4290 5.8981 0.008251

## brand:drops 2 1.5801 0.7901 1.9184 0.168695

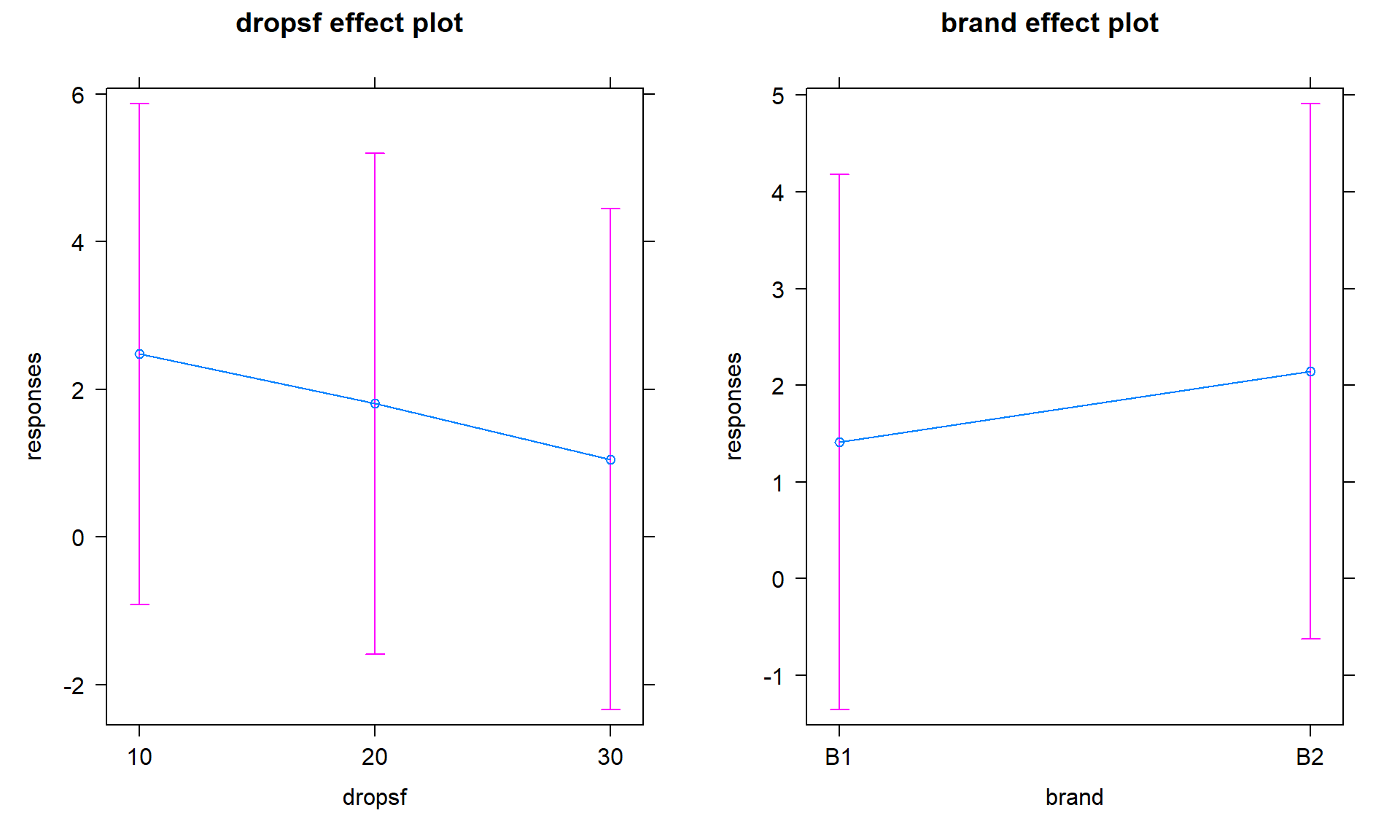

## Residuals 24 9.8840 0.4118It is useful to display the estimates from this model and we can utilize

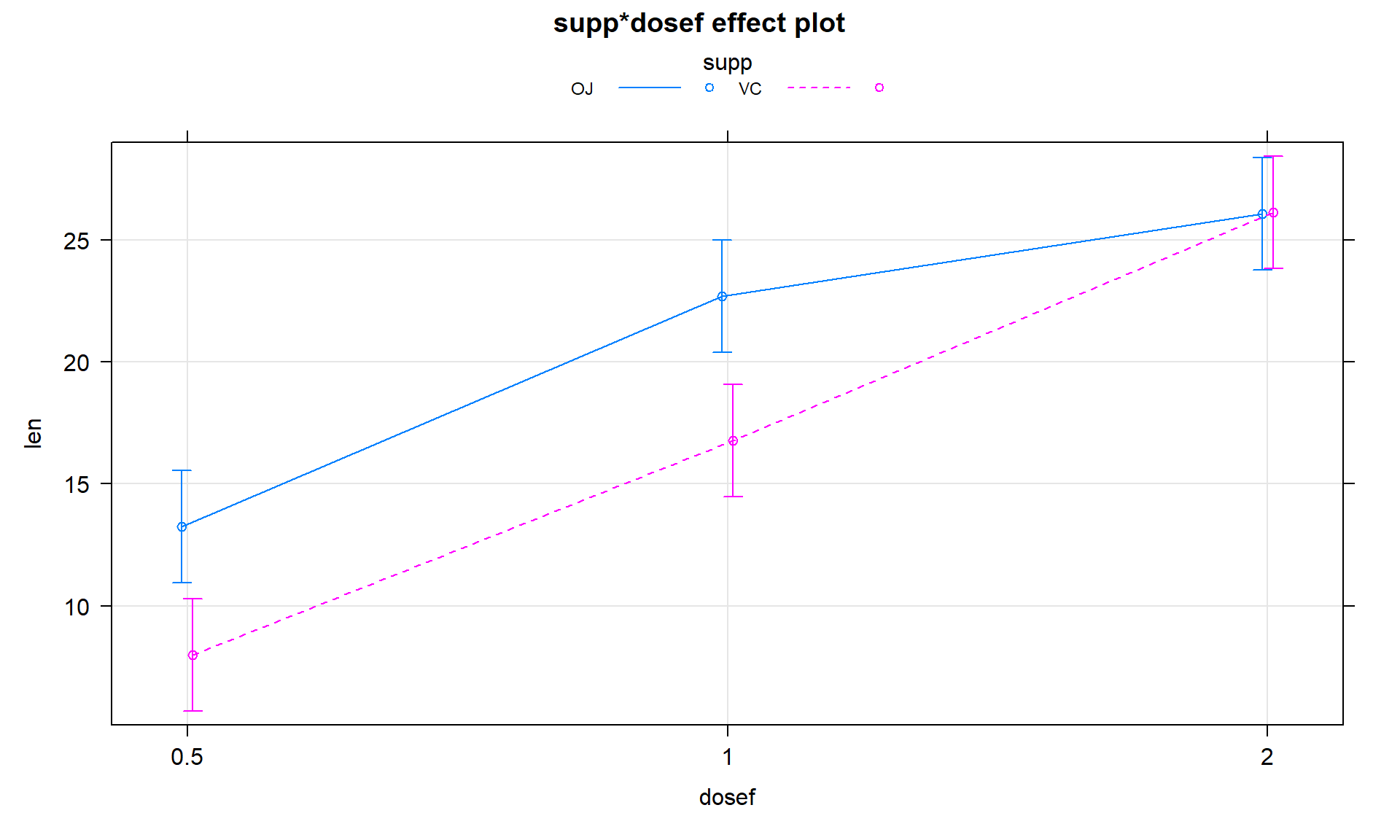

plot(allEffects(MODELNAME)) to visualize the results for the terms

in our models. If we turn on the options for grid = T, multiline = T,

and ci.style = "bars" we get a useful version of the basic

“effect plot” for Two-Way ANOVA

models with interaction. I also added lty = c(1:2) to change the line type for the two lines (replace 2 with the number of levels in the variable driving the different lines. The results of the estimated interaction model are

displayed in Figure 4.7, which looks very similar to our

previous interaction plot. The only difference is that this comes from model

that assumes equal variance and these plots show 95% confidence intervals

for the means instead of the \(\pm\) 1 SE used in the intplot where each SE is calculated using the variance of the observations at each combination of levels. Note that other than the lines connecting the means, this plot also is similar to the pirate-plot in Figure 4.1 that also displayed the original responses for each of the six combinations of the two explanatory variables. That plot then provides a place to assess assumptions of the equal variance and distributions for each group as well as explore differences in the group means.

library(effects)

plot(allEffects(m1), grid = T, multiline = T, lty = c(1:2), ci.style = "bars")

Figure 4.7: Plot of estimated results of interaction model for the paper towel performance data.

In the absence of sufficient evidence to include the

interaction, the model should be simplified to the additive model and the interpretation

focused on each main effect, conditional on having the other variable in the

model. To fit an additive model and not include an interaction, the model

formula involves a “+” instead of a “*” between the explanatory variables.

m2 <- lm(responses ~ brand + drops, data = pt)

anova(m2)## Analysis of Variance Table

##

## Response: responses

## Df Sum Sq Mean Sq F value Pr(>F)

## brand 1 4.3322 4.3322 9.8251 0.004236

## drops 2 4.8581 2.4290 5.5089 0.010123

## Residuals 26 11.4641 0.4409The p-values for the main effects of brand and drops change slightly from the

results in the interaction model due to changes in the \(\text{MS}_E\) from

0.4118 to 0.4409 (more variability is left over in the simpler model) and the

\(\text{DF}_{\text{error}}\) that increases from 24 to 26. In both models, the

\(\text{SS}_{\text{Total}}\) is the same (20.6544). In the interaction model,

\[\begin{array}{rl} \text{SS}_{\text{Total}} & = \text{SS}_{\text{brand}} + \text{SS}_{\text{drops}} + \text{SS}_{\text{brand:drops}} + \text{SS}_{\text{E}}\\ & = 4.3322 + 4.8581 + 1.5801 + 9.8840\\ & = 20.6544.\\ \end{array}\]

In the additive model, the variability that was attributed to the interaction term in the interaction model (\(\text{SS}_{\text{brand:drops}} = 1.5801\)) is pushed into the \(\text{SS}_{\text{E}}\), which increases from 9.884 to 11.4641. The sums of squares decomposition in the additive model is

\[\begin{array}{rl} \text{SS}_{\text{Total}} & = \text{SS}_{\text{brand}} + \text{SS}_{\text{drops}} + \text{SS}_{\text{E}} \\ & = 4.3322 + 4.8581 + 11.4641 \\ & = 20.6544. \\ \end{array}\]

This shows that the sums of squares decomposition applies in these more complicated models as it did in the One-Way ANOVA. It also shows that if the interaction is removed from the model, that variability is lumped in with the other unexplained variability that goes in the \(\text{SS}_{\text{E}}\) in any model.

The fact that the sums of squares decomposition can be applied here is

useful, except that there is a small issue

with the main effect tests in the ANOVA table results that follow this

decomposition when the design is not balanced. It ends up that the tests in a

typical ANOVA table are only conditional on the tests higher up in the table. For

example, in the additive model ANOVA table, the Brand test is not

conditional on the Drops effect, but the Drops effect is conditional on the

Brand effect. In balanced designs, conditioning on the other variable does not change the results but in unbalanced designs, the order does matter. To get both results to be similarly conditional on the other variable, we have to use another type of sums of

squares, called Type II sums of squares.

These sums of squares will no longer always

follow the rules of the sums of squares decomposition but they

will test the desired hypotheses. Specifically, they provide each test

conditional on any other terms at the same level of the model and match the

hypotheses written out earlier in this section. To get the “correct” ANOVA

results, the car package (Fox, Weisberg, and Price (2022a), Fox and Weisberg (2011)) is required. We use the

Anova function on our linear models from here forward to get the “right”

tests in our ANOVA tables86.

Note how the case-sensitive nature of R code shows

up in the use of the capital “A” Anova function instead of the lower-case “a” anova

function used previously. In this situation, because the design was balanced, the

results are the same using either function. Observational studies rarely

generate balanced designs (some designed studies can result in unbalanced

designs too) so we will generally just use the Type II version of the sums of

squares to give us the desired results across different data sets we might analyze. The Anova results using the Type II sums of

squares are slightly more conservative than the results from anova,

which are called Type I sums of squares.

The sums of squares decomposition no

longer applies, but it is a small sacrifice to get each test after

adjusting for all other variables87.

library(car)

Anova(m2)## Anova Table (Type II tests)

##

## Response: responses

## Sum Sq Df F value Pr(>F)

## brand 4.3322 1 9.8251 0.004236

## drops 4.8581 2 5.5089 0.010123

## Residuals 11.4641 26The new output switches the columns around and doesn’t show you the mean squares, but gives the most critical parts of the output. Here, there is no change in results because it is a balanced design with equal counts of responses in each combination of the two explanatory variables.

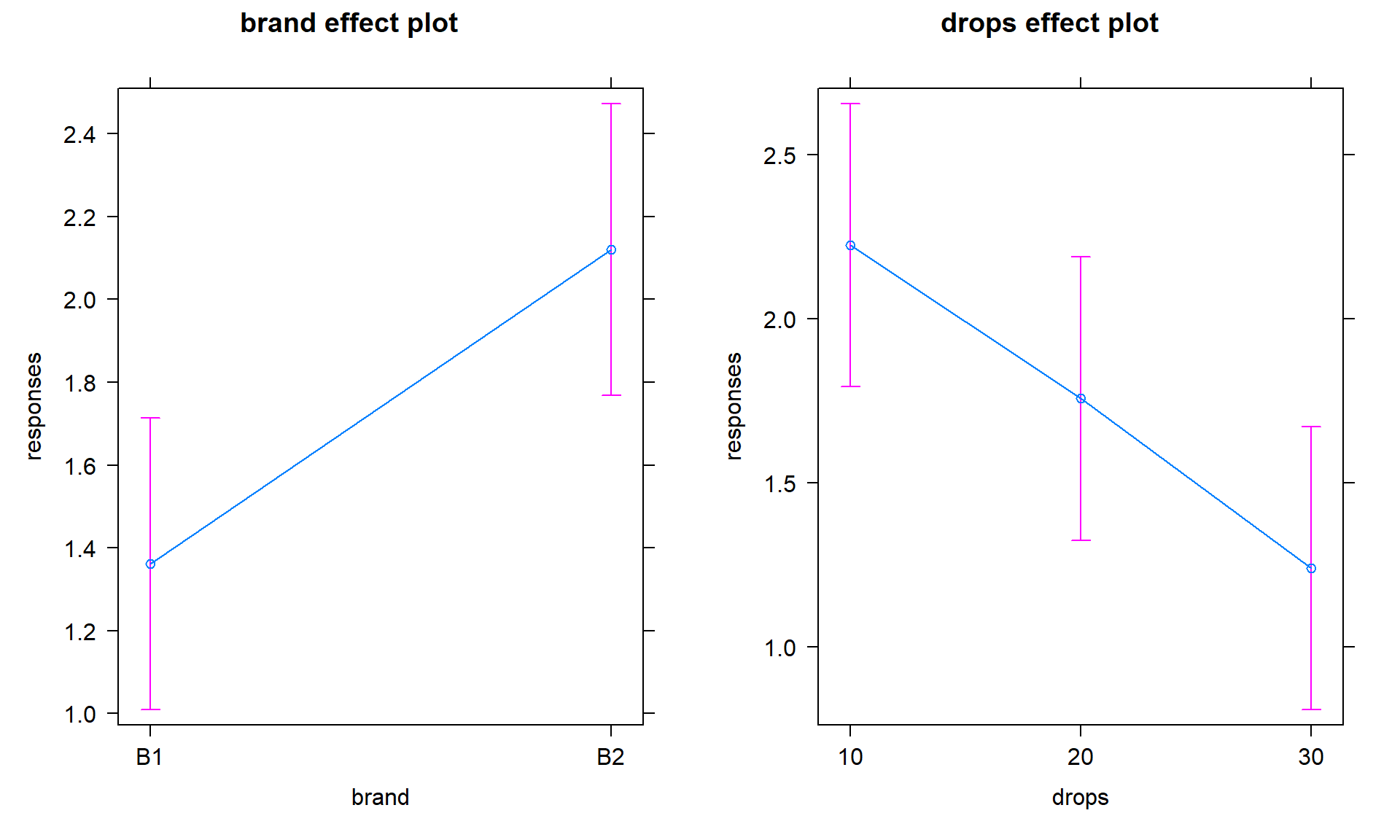

The additive model, when appropriate, provides simpler interpretations for each explanatory variable compared to models with interactions because the effect of one variable is the same regardless of the levels of the other variable and vice versa. There are two tools to aid in understanding the impacts of the two variables in the additive model. First, the model summary provides estimated coefficients with interpretations like those seen in Chapter 3 (deviation of group \(j\) or \(k\) from the baseline group’s mean), except with the additional wording of “controlling for” the other variable added to any of the discussion. Second, the term-plots now show each main effect and how the groups differ with one panel for each of the two explanatory variables in the model. These term-plots are created by holding the other variable constant at one of its levels (the most frequently occurring or first if the there are multiple groups tied for being most frequent) and presenting the estimated means across the levels of the variable in the plot.

summary(m2)##

## Call:

## lm(formula = responses ~ brand + drops, data = pt)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1.4561 -0.4587 0.1297 0.4434 0.9695

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 1.8454 0.2425 7.611 4.45e-08

## brandB2 0.7600 0.2425 3.134 0.00424

## drops20 -0.4680 0.2970 -1.576 0.12715

## drops30 -0.9853 0.2970 -3.318 0.00269

##

## Residual standard error: 0.664 on 26 degrees of freedom

## Multiple R-squared: 0.445, Adjusted R-squared: 0.3809

## F-statistic: 6.948 on 3 and 26 DF, p-value: 0.001381In the model summary, the baseline combination estimated in the (Intercept)

row is for Brand B1 and Drops 10 and estimates the mean failure

time as 1.85 seconds for this combination. As

before, the group labels that do not show up are the baseline but there are two

variables’ baselines to identify. Now the “simple” aspects of the additive

model show up. The interpretation of the Brands B2 coefficient is as

a deviation from the baseline but it applies regardless of the level of

Drops. Any difference between B1 and B2 involves a shift up of 0.76 seconds

in the estimated mean failure time. Similarly, going from 10 (baseline) to 20

drops results in a drop in the estimated failure mean of 0.47 seconds and going

from 10 to 30 drops results in a drop of almost 1 second in the average time to

failure, both estimated changes are the same regardless of the brand of paper

towel being considered. Sometimes, especially in observational studies, we use

the terminology “controlled for” to remind the reader that the other variable

was present in the model88 and also explained some of the variability in the

responses. The term-plots for

the additive model (Figure 4.8) help us visualize the

impacts of changes brand and changing water levels, holding the other

variable constant. The differences in heights in each panel correspond

to the coefficients just discussed.

library(effects)

plot(allEffects(m2))

Figure 4.8: Term-plots of additive model for paper towel data. Left panel displays results for two brands and right panel for number of drops of water, each after controlling for the other.

With the first additive model we have considered, it is now the first time where we are working with a model where we can’t display the observations together with the means that the model is producing because the results for each predictor are averaged across the levels of the other predictor. To visualize some aspects of the original observations with the estimates from each group, we can turn on an option in the term-plots (residuals = T) to obtain the partial residuals that show the residuals as a function of one variable after adjusting for the effects/impacts of other variables. We will avoid the specifics of the calculations for now, but you can use these to explore the residuals at different levels of each predictor. They will be most useful in the Chapters 7 and 8 but give us some insights in unexplained variation in each level of the predictors once we remove the impacts of other predictors in the model. Use plots like Figure 4.9 to look for different variability at different levels of the predictors and locations of possible outliers in these models. Note that the points (open circles) are jittered to aid in seeing all of them, the means of each group of residuals are indicated by a filled large circle, and the smaller circles in the center of the bars for the 95% confidence intervals are the means from the model. Term-plots with partial residuals accompany our regular diagnostic plots for assessing equal variance assumptions in these models – in some cases adding the residuals will clutter the term-plots so much that reporting them is not useful since one of the main purposes of the term-plots is to visualize the model estimates. So use the residuals = T option judiciously.

library(effects)

plot(allEffects(m2, residuals = T))

Figure 4.9: Term-plots of additive model for paper towel data with partial residuals added. Relatively similar variability seems to be present in each of the groups of residuals after adjusting for the other variable except for the residuals for the 10 drops where the variability is smaller, especially if one small outlier is ignored.

For the One-Way and Two-Way interaction models, the partial residuals are just the original observations so present similar information as the pirate-plots but do show the model estimated 95% confidence intervals. With interaction models, you can use the default settings in effects when adding in the partial residuals as seen below in Figure 4.12.

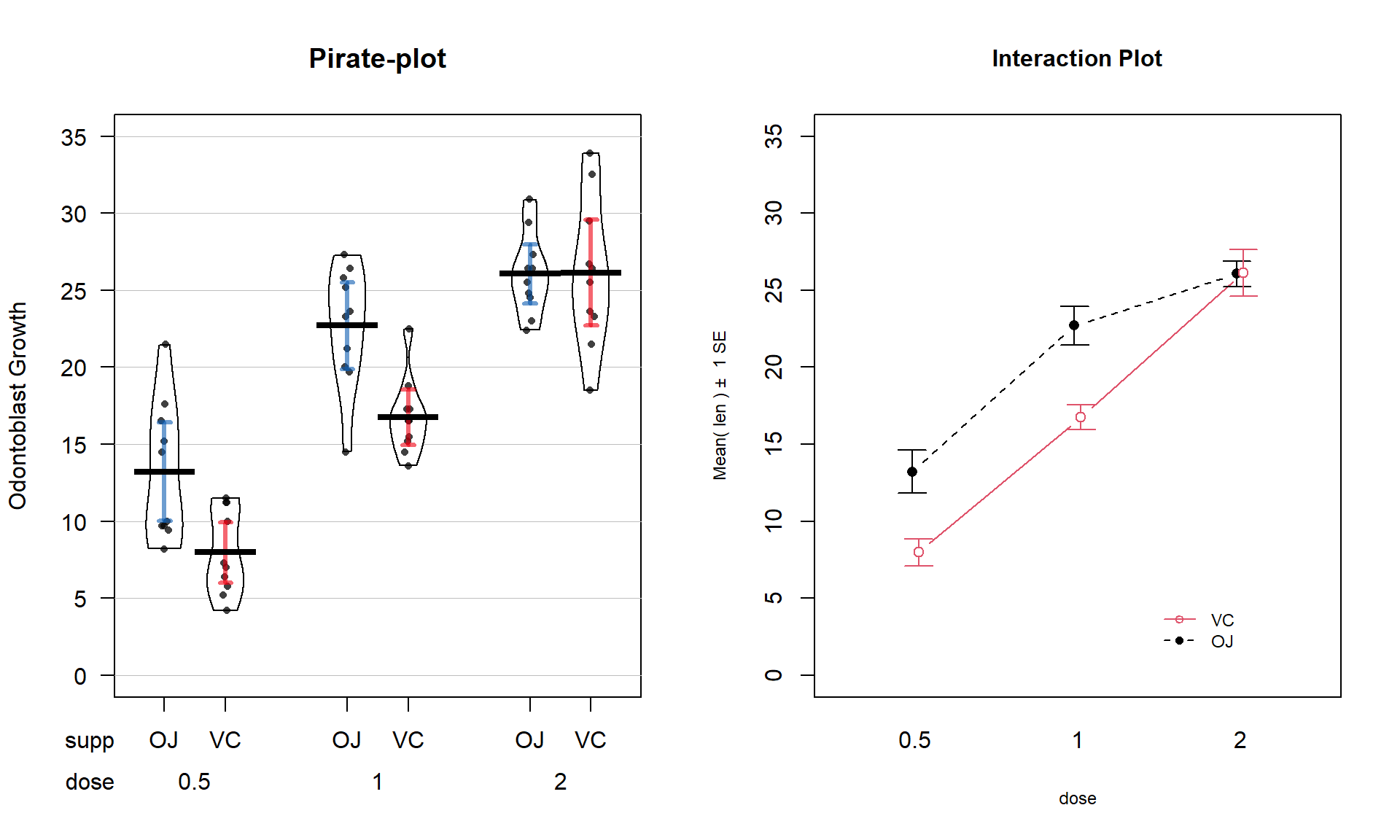

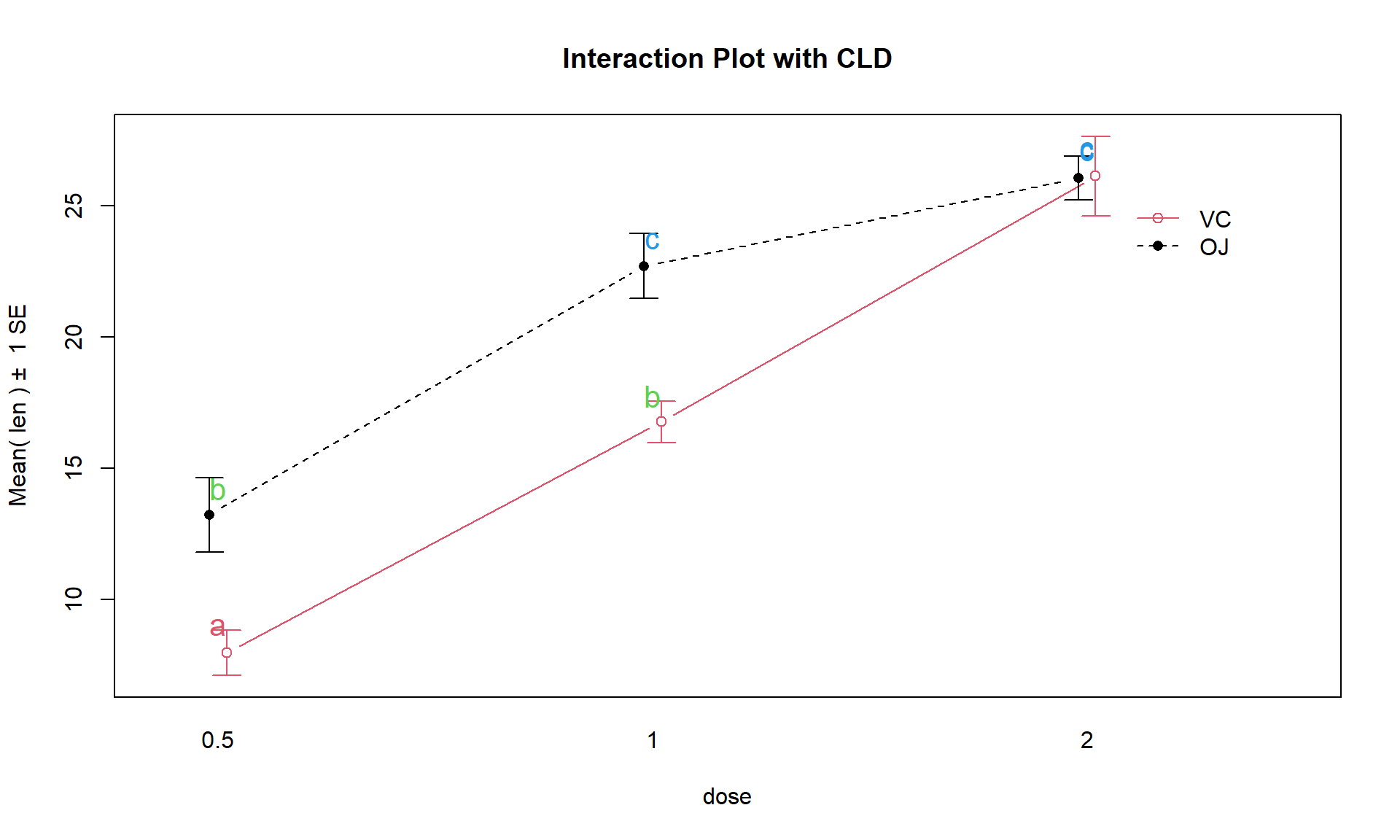

4.4 Guinea pig tooth growth analysis with Two-Way ANOVA

The effects of dosage and delivery method of ascorbic acid on Guinea Pig odontoblast growth was analyzed as a One-Way ANOVA in Section 3.5 by assessing evidence of any difference in the means of any of the six combinations of dosage method (Vit C capsule vs Orange Juice) and three dosage amounts (0.5, 1, and 2 mg/day). Now we will consider the dosage and delivery methods as two separate variables and explore their potential interaction. A pirate-plot and interaction plot are provided in Figure 4.10.

Figure 4.10: Pirate-plot and interaction plot of the odontoblast growth data set.

data(ToothGrowth)

library(tibble)

ToothGrowth <- as_tibble(ToothGrowth)par(mfrow = c(1,2))

pirateplot(len ~ supp * dose, data = ToothGrowth, ylim = c(0,35),

main = "Pirate-plot", xlab = "Dosage", ylab = "Odontoblast Growth",

inf.method = "ci", inf.disp = "line", theme = 2)

intplot(len ~ supp * dose, data = ToothGrowth, col = c(1,2),

main = "Interaction Plot", ylim = c(0,35))It appears that the effect of method changes based on the dosage as the interaction plot seems to show some evidence of non-parallel lines. Actually, it appears that the effect of delivery method is the same (parallel lines) for doses 0.5 and 1.0 mg/day but that the effect of delivery method changes for 2 mg/day.

We can use the ANOVA \(F\)-test for an interaction to assess whether we think the interaction is “real” relative to the variability in the responses. That is, is it larger than we would expect due to natural variation in the data? If yes, then we think it is a real effect and we should account for it. The following code fits the interaction model and provides an ANOVA table.

TG1 <- lm(len ~ supp * dose, data = ToothGrowth)

Anova(TG1)## Anova Table (Type II tests)

##

## Response: len

## Sum Sq Df F value Pr(>F)

## supp 205.35 1 12.3170 0.0008936

## dose 2224.30 1 133.4151 < 2.2e-16

## supp:dose 88.92 1 5.3335 0.0246314

## Residuals 933.63 56The R output is reporting an interaction test result of \(F(1,56) = 5.3\) with

a p-value of 0.025. But this should raise a red flag since the numerator

degrees of freedom are not what we should expect based on Table 4.1 of \((K-1)*(J-1) = (2-1)*(3-1) = 2\).

This brings up an issue in R when working

with categorical variables. If the levels of a categorical variable are entered

numerically, R will treat them as quantitative variables and not split out the

different levels of the categorical variable. To make sure that R treats

categorical variables the correct way, we should use the factor

function on any variables89 that are categorical in meaning but are coded numerically in the data set. The following code creates a new variable called dosef

using mutate and the factor function to help us obtain correct results from the linear

model.

The re-run of the ANOVA table provides the correct analysis and the

expected \(df\) for the two rows of output involving dosef:

ToothGrowth <- ToothGrowth %>% mutate(dosef = factor(dose))

TG2 <- lm(len ~ supp * dosef, data = ToothGrowth)

Anova(TG2)## Anova Table (Type II tests)

##

## Response: len

## Sum Sq Df F value Pr(>F)

## supp 205.35 1 15.572 0.0002312

## dosef 2426.43 2 92.000 < 2.2e-16

## supp:dosef 108.32 2 4.107 0.0218603

## Residuals 712.11 54The ANOVA \(F\)-test for an interaction between supplement type and dosage level is \(F(2,54) = 4.107\) with a p-value of 0.022. So there is moderate to strong evidence against the null hypothesis of no interaction between Dosage and Delivery method, so we would likely conclude that there is an interaction present that we should discuss and this supports a changing effect on odontoblast growth of dosage based on the delivery method in these guinea pigs.

Any similarities between this correct result and the previous WRONG result are coincidence. I once attended a Master’s thesis defense where the results from a similar model were not as expected (small p-values in places they didn’t expect and large p-values in places where they thought differences existed based on past results and plots of the data). During the presentation, the student showed some ANOVA tables and the four level categorical variable had 1 numerator \(df\) in all ANOVA tables. The student passed with major revisions but had to re-run all the results and re-write all the conclusions… So be careful to check the ANOVA results (\(df\) and for the right number of expected model coefficients) to make sure they match your expectations. This is one reason why you will be learning to fill in ANOVA tables based on information about the study so that you can be prepared to detect when your code has let you down90. It is also a great reason to explore term-plots and coefficient interpretations as that can also help diagnose errors in model construction.

Getting back to the previous results, we now have enough background information to more formally write up a focused interpretation of these results. The 6+ hypothesis testing steps in this situation would be focused on first identifying that the best analysis here is as a Two-Way ANOVA situation (these data were analyzed in Chapter 3 as a One-Way ANOVA but this version is likely better because it can explore whether there is an interaction between delivery method and dosage). We will focus on assessing the interaction. If the interaction had been dropped, we would have reported the test results for the interaction, then re-fit the additive model and used it to explore the main effect tests and estimates for Dose and Delivery method. But since we are inclined to retain the interaction component in the model, the steps focus on the interaction.

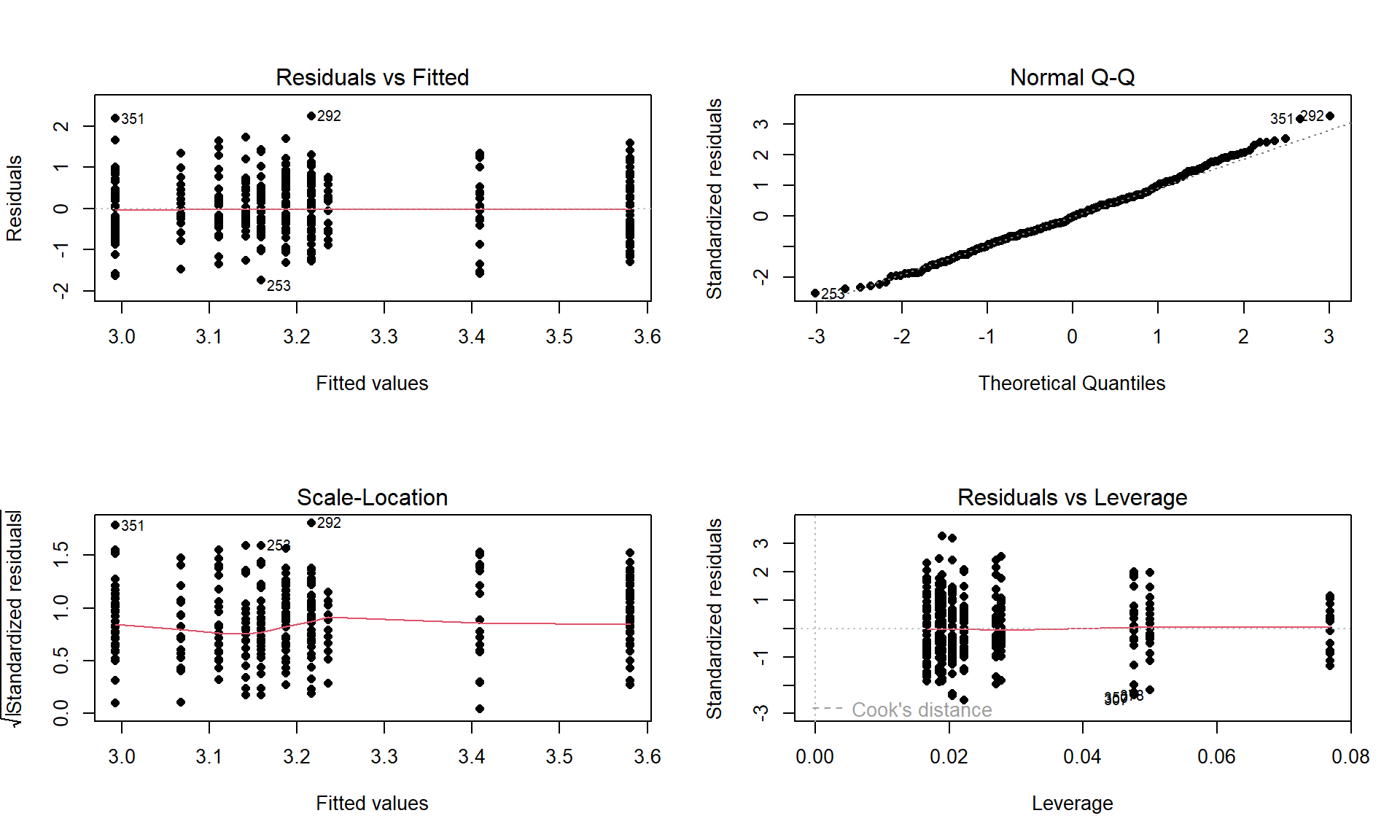

par(mfrow = c(2,2))

plot(TG2, pch = 16)

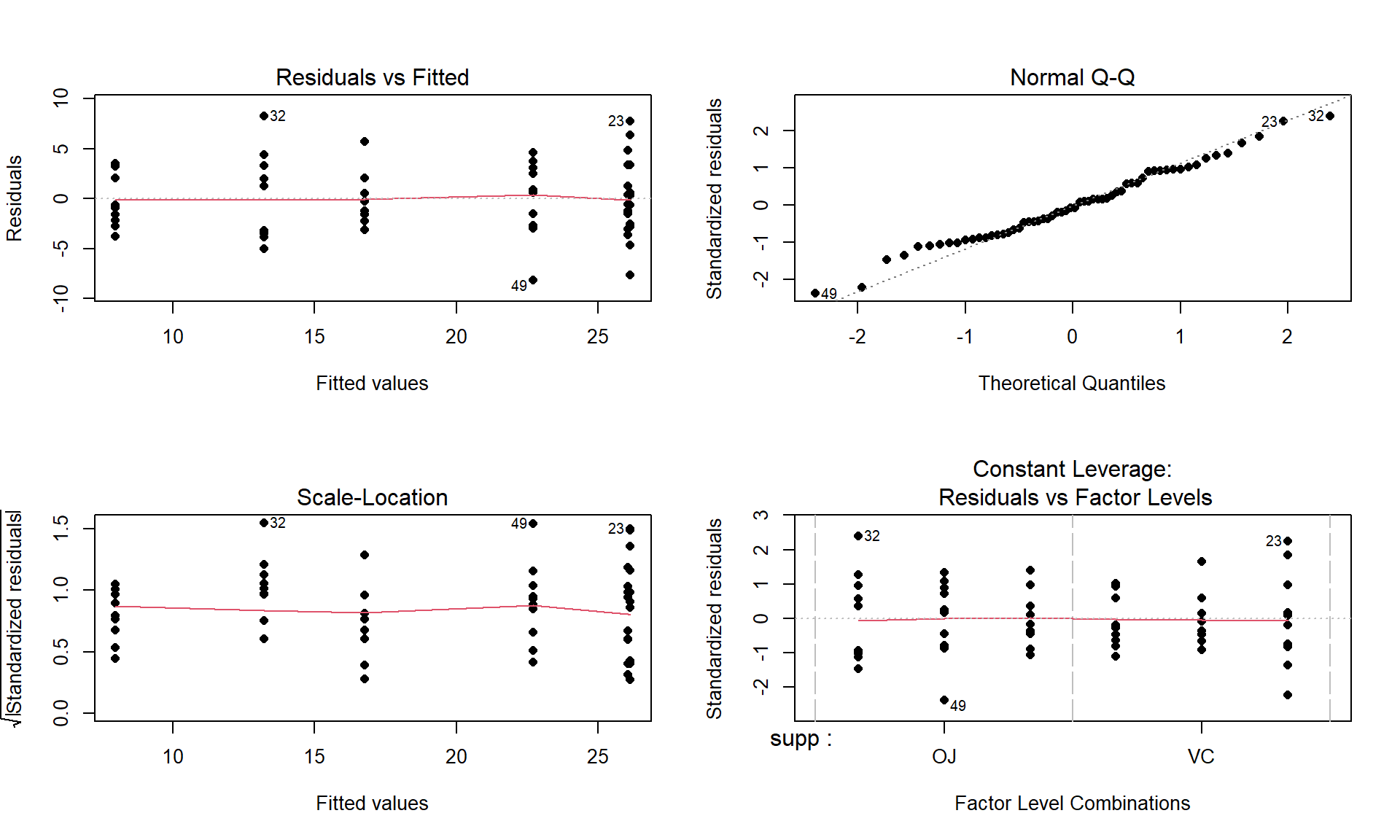

Figure 4.11: Diagnostic plots for the interaction model for odontoblast growth interaction model.

The RQ is whether there is an interaction of dosage and delivery method on odontoblast growth. Data were collected at all combinations of these predictor variables on the size of the cells, so they can address the size of the cells in these condition combinations. The interaction \(F\)-test will be used to assess the research question.

Hypotheses:

\(H_0\): No interaction between Delivery method and Dose on odontoblast growth in population of guinea pigs

\(\Leftrightarrow\) All \(\omega_{jk}\text{'s} = 0\).

\(H_A\): Interaction between Delivery method and Dose on odontoblast growth in population of guinea pigs

\(\Leftrightarrow\) At least one \(\omega_{jk}\ne 0\).

Plot the data and assess validity conditions:

Independence:

- There is no indication of an issue with this assumption because we don’t know of a reason why the independence of the measurements of odontoblast growth of across the guinea pigs as studied might be violated.

Constant variance:

To assess this assumption, we can use the pirate-plot in Figure 4.10, the diagnostic plots in Figure 4.11, and by adding the partial residuals to the term-plot91 as shown in 4.12.

In the Residuals vs Fitted and the Scale-Location plots, the differences in variability among the groups (see the different x-axis positions for each group’s fitted values) is minor, so there is not strong evidence of a problem with the equal variance assumption. Similarly, the original pirate-plots and adding the partial residuals to the term-plot do not highlight big differences in variability at any of the combinations of the predictors, so do not suggest clear issues with this assumption.

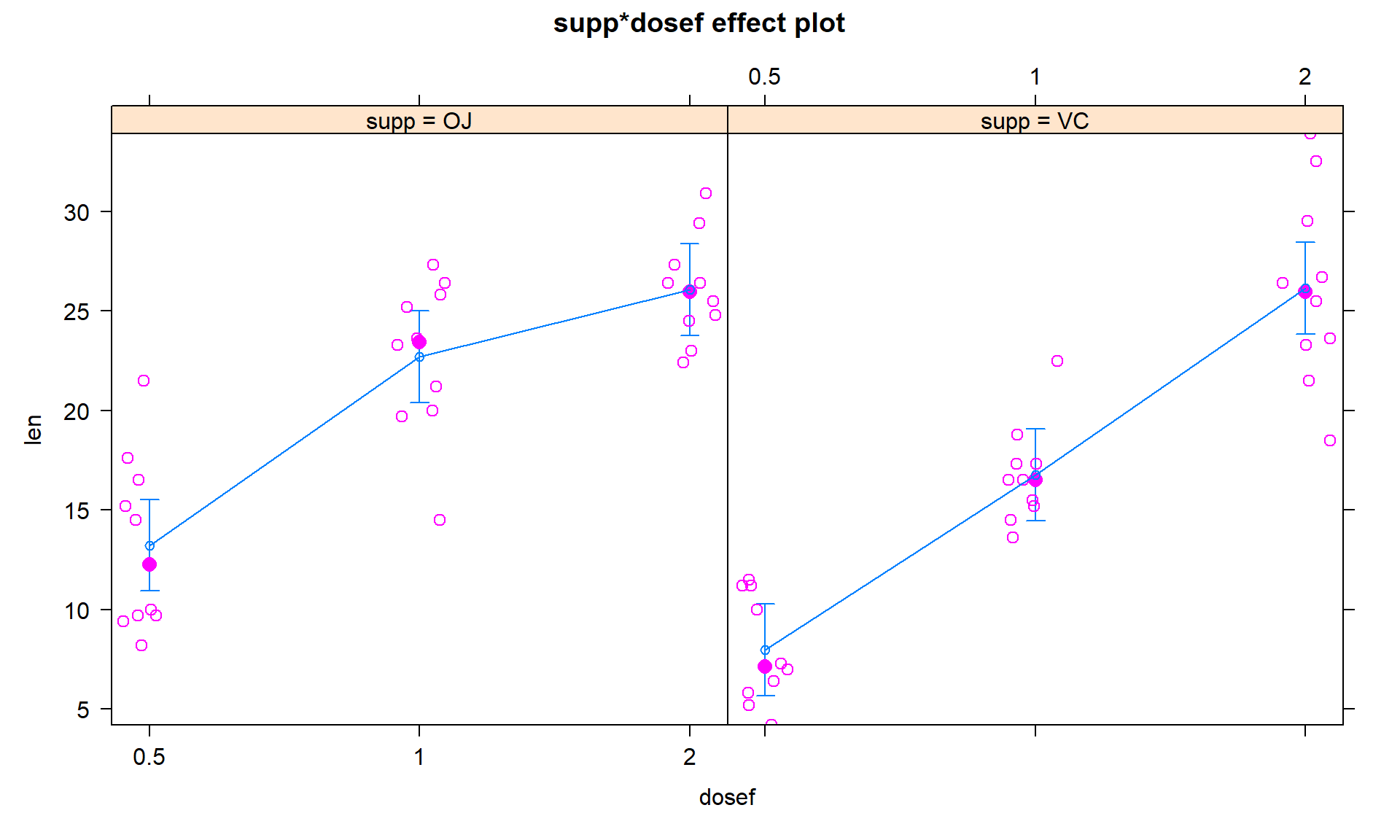

plot(allEffects(TG2, residuals = T, x.var = "dosef"))

Figure 4.12: Term-plot for odontoblast growth interaction model with partial residuals added.

Normality of residuals:

- The QQ-Plot in Figure 4.11 does not suggest a problem with this assumption.

Note that these diagnostics and conclusions are the same as in Section 3.5 because the interaction model and the One-Way ANOVA model with all six combinations of the levels of the two variables fit exactly the same. But the RQ that we can address differs due to the different model parameterizations.

Calculate the test statistic and p-value for the interaction test.

TG2 <- lm(len ~ supp * dosef, data = ToothGrowth) Anova(TG2)## Anova Table (Type II tests) ## ## Response: len ## Sum Sq Df F value Pr(>F) ## supp 205.35 1 15.572 0.0002312 ## dosef 2426.43 2 92.000 < 2.2e-16 ## supp:dosef 108.32 2 4.107 0.0218603 ## Residuals 712.11 54The test statistic is \(F(2,54) = 4.107\) with a p-value of 0.0219

To find this p-value directly in R from the test statistic value and \(F\)-distribution, we can use the

pffunction.

pf(4.107, df1 = 2, df2 = 54, lower.tail = F)## [1] 0.0218601

Conclusion based on p-value:

- With a p-value of 0.0219 (from \(F(2,54) = 4.107\)), there is about a 2.19% chance we would observe an interaction like we did (or more extreme) if none were truly present. This provides moderate to strong evidence against the null hypothesis of no interaction between delivery method and dosage on odontoblast growth in the population so we would conclude that there is likely an interaction and would retain the interaction in the model.

Size of differences:

- See discussion below.

Scope of Inference:

- Based on the random assignment of treatment levels, causal inference is possible (the changes due to dosage in the differences based on supplement type caused the differences in growth) but because the guinea pigs were not randomly selected, the inferences only pertain to these guinea pigs.

In a Two-Way ANOVA, we need to go a little further to get to the final “size” interpretations since the models are more complicated. When there is an interaction present, we should focus on the term-plot of the interaction model for an interpretation of the form and pattern of the interaction. If the interaction were unimportant, then the hypotheses and results should focus on the additive model results, especially the estimated model coefficients. To see why we don’t usually discuss all the estimated model coefficients in an interaction model, the six coefficients for this model are provided:

summary(TG2)$coefficients## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 13.23 1.148353 11.5208468 3.602548e-16

## suppVC -5.25 1.624017 -3.2327258 2.092470e-03

## dosef1 9.47 1.624017 5.8312215 3.175641e-07

## dosef2 12.83 1.624017 7.9001660 1.429712e-10

## suppVC:dosef1 -0.68 2.296706 -0.2960762 7.683076e-01

## suppVC:dosef2 5.33 2.296706 2.3207148 2.410826e-02There are two \(\widehat{\omega}_{jk}\text{'s}\) in the results, related to modifying the estimates for doses of 1 (-0.68) and 2 (5.33) for the Vitamin C group. If you want to re-construct the fitted values from the model that are displayed in Figure 4.13, you have to look for any coefficients that are “turned on” for a combination of levels of interest. For example, for the OJ group (solid line), the dosage of 0.5 mg/day has an estimate of an average growth of approximately 13 mm. This is the baseline group, so the model estimate for an observation in the OJ and 0.5 mg/day dosage is simply \(\widehat{y}_{i,\text{OJ},0.5mg} = \widehat{\alpha} = 13.23\) microns. For the OJ and 2 mg/day dosage estimate that has a value over 25 microns in the plot, the model incorporates the deviation for the 2 mg/day dosage: \(\widehat{y}_{i,\text{OJ},2mg} = \widehat{\alpha} + \widehat{\tau}_{2mg} = 13.23 + 12.83 = 26.06\) microns. For the Vitamin C group, another coefficient becomes involved from its “main effect”. For the VC and 0.5 mg dosage level, the estimate is approximately 8 microns. The pertinent model components are \(\widehat{y}_{i,\text{VC},0.5mg} = \widehat{\alpha} + \widehat{\gamma}_{\text{VC}} = 13.23 + (-5.25) = 7.98\) microns. Finally, when we consider non-baseline results for both groups, three coefficients are required to reconstruct the results in the plot. For example, the estimate for the VC, 1 mg dosage is \(\widehat{y}_{i,\text{VC},1mg} = \widehat{\alpha} + \widehat{\tau}_{1mg} + \widehat{\gamma}_{\text{VC}} + \widehat{\omega}_{\text{VC},1mg} = 13.23 + 9.47 + (-5.25) +(-0.68) = 16.77\) microns. We usually will by-pass all this fun(!) with the coefficients in an interaction model and go from the ANOVA interaction test to focusing on the pattern of the responses in the interaction plot or going to the simpler additive model, but it is good to know that there are still model coefficients driving our results even if there are too many to be easily interpreted.

Figure 4.13: Term-plot for the estimated interaction for the Odontoblast Growth data using the multiline = T and ci.style = "bars" options.

Figure 4.14: Interaction plot for Odontoblast data with added CLD from Tukey’s HSD.

plot(allEffects(TG2), grid = T, multiline = T, lty = c(1:2), ci.style = "bars")Given the presence of an important

interaction, then the final step in the interpretation here is to interpret the

results in the interaction plot or term-plot of the interaction model,

supported by the p-value suggesting a different effect of

supplement type based on the dosage level. To supplement this even more,

knowing which combinations of levels differ can enhance our discussion. Tukey’s

HSD results (specifically the CLD) can be added to the original interaction

plot by turning on the cld = T option in the intplot function as seen in

Figure 4.14. Sometimes it is hard to see the letters and so

there is also a cldshift = ... option to move the letters up or down; here a

value of 1 seemed to work.

intplot(len ~ supp * dose, data = ToothGrowth, col = c(1,2), cldshift = 1,

cld = T, main = "Interaction Plot with CLD")The “size” interpretation of the previous hypothesis test result could be something like the following: Generally increasing the dosage increases the mean growth except for the 2 mg/day dosage level where the increase levels off in the OJ group (OJ 1 and 2 mg/day are not detectably different) and the differences between the two delivery methods disappear at the highest dosage level. But for 0.5 and 1 mg/day dosages, OJ is clearly better than VC by about 10 microns of growth on average.

4.5 Observational study example: The Psychology of Debt

In this section, the analysis of a survey of \(N = 464\) randomly sampled

adults will be analyzed from a survey conducted by Lea, Webley, and Walker (1995)

and available in the debt data set from the faraway package

(Faraway 2016).

The subjects responded to a variety of questions

including whether they buy cigarettes (cigbuy: 0 if

no, 1 if yes), their housing situation (house: 1 = rent, 2 = mortgage,

and 3 = owned outright), their income group (incomegp: 1 =

lowest, 5 = highest), and their score on a continuous scale of attitudes about

debt (prodebt: 1 = least favorable, 6 = most favorable). The variable prodebt

was derived as the average of a series of questions about debt with each

question measured on an ordinal 1 to 6 scale, with higher values

corresponding to more positive responses about \(\underline{\text{going into debt}}\)

of various kinds. The ordered scale on surveys that try to elicit your opinions on

topics with scales from 1 to 5, 1 to 6, 1 to 7 or even, sometimes, 1 to 10 is called

a Likert scale (Likert 1932). It is not a quantitative scale and really

should be handled more carefully than taking an average of a set responses as was done here. That

said, it is extremely common practice in social science research to treat ordinal

responses as if they are quantitative and take the average of many of them to

create a more continuous response variable like the one we are using here. If

you continue your statistics explorations, you will see some better techniques

for analyzing ordinal responses. That said, the scale of the

response is relatively easy to understand as an amount of willingness to go

into debt on a scale from 1 to 6 with higher values corresponding to more

willingness to be in debt.

These data are typical of survey data where respondents were not required

to answer all questions and there are some

missing responses. We could clean out any individuals that failed to respond to

all questions (called “complete cases”) using the drop_na function, which will return responses only for subjects

that responded to every question in

the data set, debt. The change in sample size is available by running the dim function on the two data sets – there were \(464\) observations (rows) initially along with \(13\) variables (columns) and once observations with any missing values were dropped there are \(N = 304\) for us to analyze. Losing 35% of the observations is a pretty noticeable loss.

library(faraway)

data(debt)

library(tibble)

debt <- as_tibble(debt) %>% mutate(incomegp = factor(incomegp),

cigbuy = factor(cigbuy)

)

debtc <- debt %>% drop_na()

dim(debt)## [1] 464 13dim(debtc)## [1] 304 13Using drop_na() with a list a variable names, we can focus on the three variables we are using in this model and whether the responses are missing on them, only cleaning out rows that are missing on incomegp, cigbuy, and/or prodebt92. The missingness is less dramatic, retaining \(N = 388\) observations in debtRc for our analysis using these three variables.

# Remove rows with missing values based on just three variables.

debtRc <- debt %>% drop_na(incomegp, cigbuy, prodebt)

dim(debtRc)## [1] 388 13The second approach seems better as it drops fewer observations so we will use that below. But suppose that people did not want to

provide their income levels if they were in the lowest or highest

income groups and that is why they are missing. Then we would be missing responses systematically and conclusions

could be biased because of ignoring particular types of subjects. We don’t have particular statistical tools to easily handle this problem but every researcher should worry about non-response when selected subjects do not respond at

all or fail to answer some questions. When the missing values are systematic in some fashion and not just missing randomly (missing randomly might be thought of as caused by “demonic intrusion” (Hurlbert 1984) that can’t be easily explained or related to the types of responses), then we worry about non-response bias that is systematically biasing our results because of the missing responses. This also ties back into our discussion of who was sampled. We need to

think carefully about who was part of the sample but refused to participate and

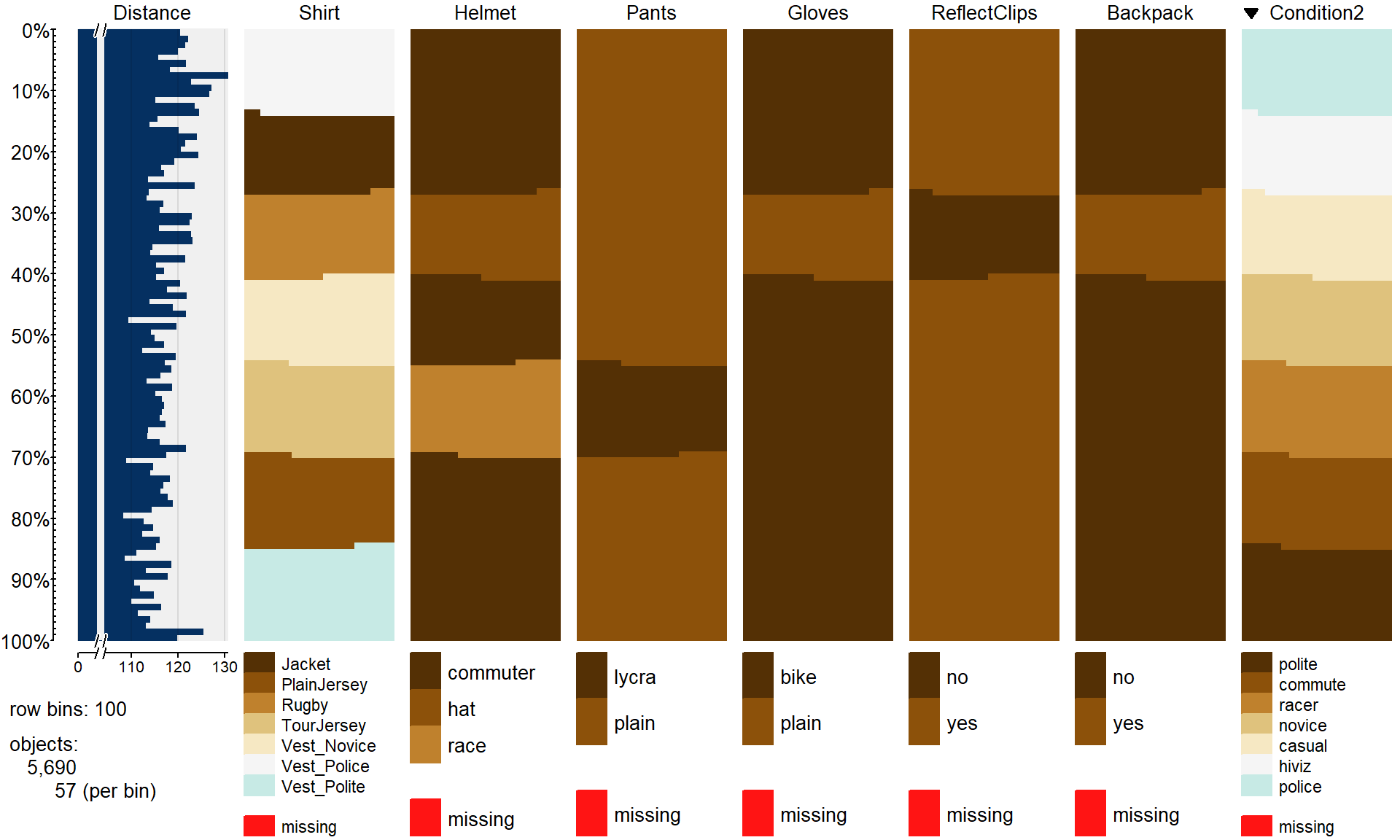

how that might impact our inferences. And whether we can even address the research question of interest based on what was measured given those that refused/failed to respond. For example, suppose we are studying river flows and are interested in the height of a river. Missingness in these responses could arise because a battery fails or the data logger “crashes” (not related to the responses and so not definitely problematic) or because of something about the measurements to be taken that causes the missingness (suppose the gage can only can measure between one and three feet deep and the river is running at four feet deep during a flood or below 1 foot during a drought). The first machine failures are very different from the height-based missing responses; the height-based missingness clearly leads to bias in estimating mean river height because of what can not be observed. In Chapter 5, we introduce the tableplot as another tool to visualize data that can also show missing data patterns to help you think about these sorts of issues further93. If you delete observations and the missing data are not random/non-systematic, your scope of inference is restricted to just those subjects that provided responses and were analyzed. If the missingness is random and not related to aspects of the measurements taken, then some missingness can be tolerated and still retain some comfort that inferences can be extended to the population a random sample of subjects was taken from.

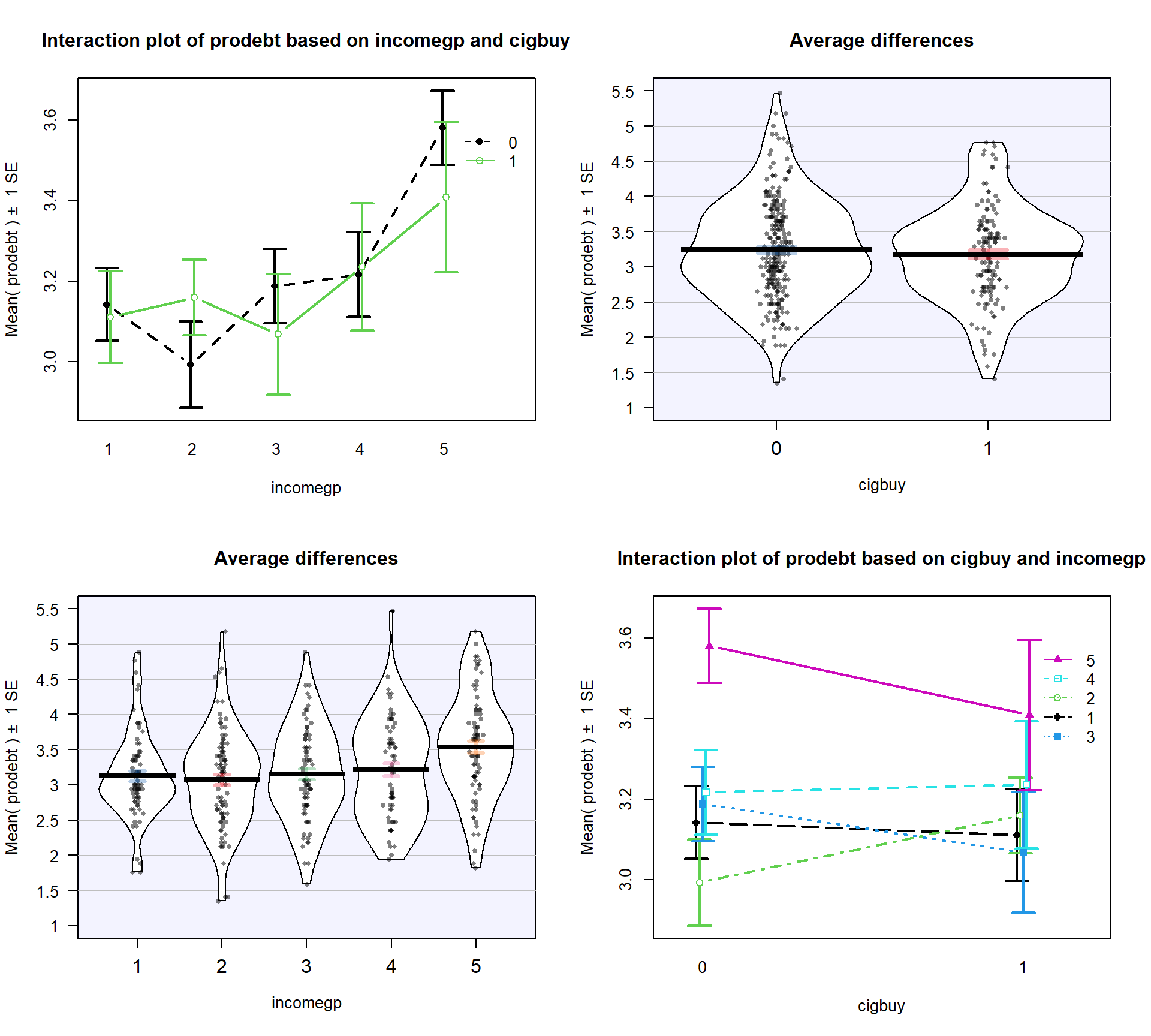

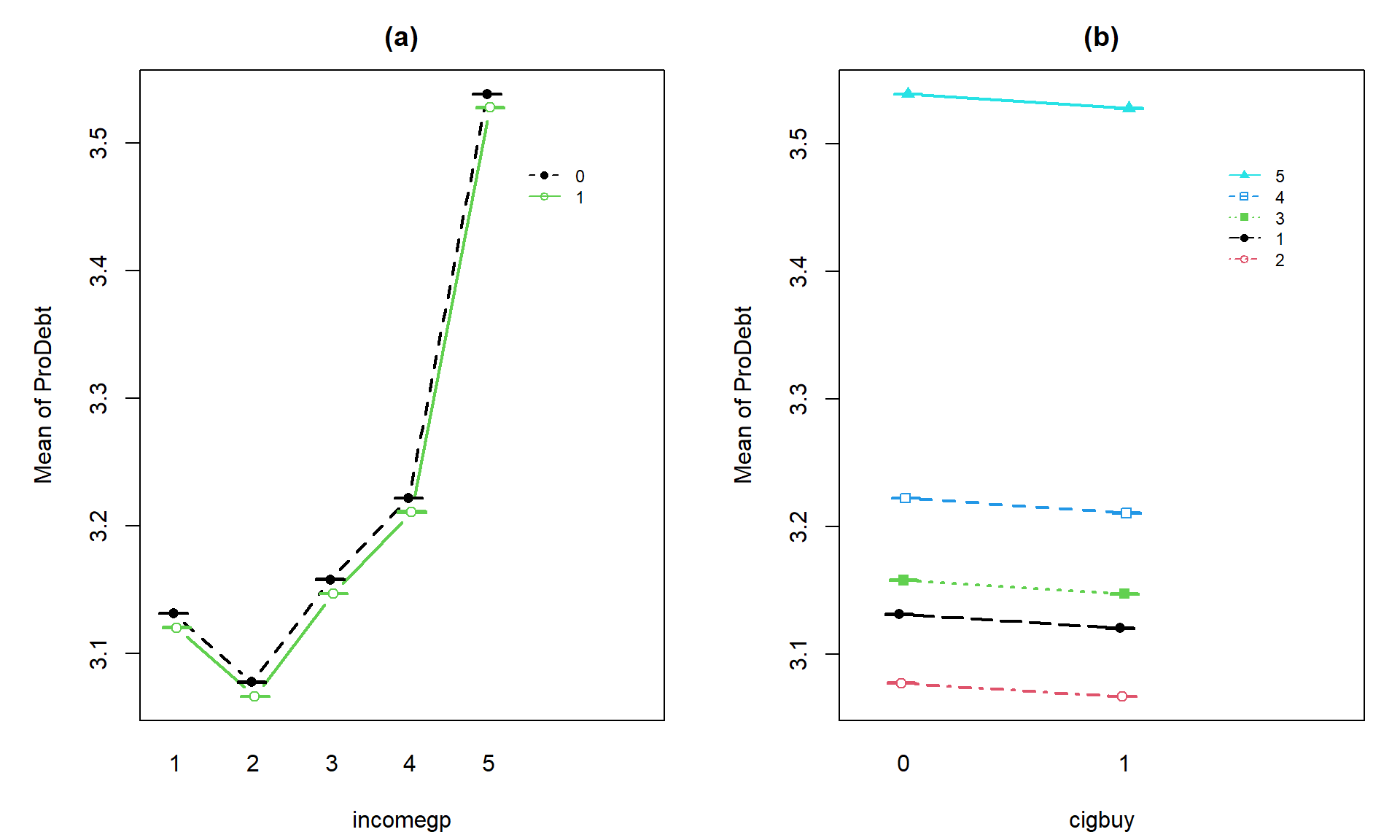

Ignoring this potential for bias in the

results for the moment, we are first interested in whether buying

cigarettes/not and income groups interact in their explanation of the

respondent’s mean opinions on being in debt. The interaction plot

(Figure 4.15) may suggest an interaction between cigbuy

and incomegp where the lines cross, switching which of the cigbuy levels is higher (income levels 2, 3, and 5) or even almost not different (income levels 1 and 4). But it is

not as clear as the previous examples, especially with how large the SEs are relative the variation in the means. The interaction \(F\)-test helps us

objectively assess evidence against the null hypothesis of no interaction. Based on the plot, there do

not appear to be differences based on cigarette purchasing but there might be some

differences between the income groups if we drop the interaction from the model. If we drop the interaction, then

this suggests that we might be in Scenario 2 or 3 where a single main effect of

interest is present.

intplotarray(prodebt ~ cigbuy * incomegp, data = debtRc, col = c(1,3,4,5,6), lwd = 2)

Figure 4.15: Interaction plot array of prodebt by income group (1 to 5) and whether they buy cigarettes (0 = no, 1 = yes).

As in other situations, and especially with observational studies where a single large sample is collected and then the levels of the factor variables are observed, it is important to check for balance – whether all the combinations of the two predictor variables are similarly represented. Even more critically, we need to check whether all the combinations of levels of factors are measured. If a combination is not measured, then we lose the ability to estimate the mean for that combination and the ability to test for an interaction. A solution to that problem would be to collapse the categories of one of the variables, changing the definitions of the levels but if you fail to obtain information for all combinations, you can’t work with the interaction model. In this situation, we barely have enough information to proceed (the smallest \(n_{jk}\) is 13 for income group 4 that buys cigarettes). We have a very unbalanced design with counts between 13 and 60 in the different combinations, so lose some resistance to violation of assumptions but can proceed to explore the model with a critical eye on how the diagnostic plots look.

tally(cigbuy ~ incomegp, data = debtRc)## incomegp

## cigbuy 1 2 3 4 5

## 0 36 49 54 53 60

## 1 37 45 20 13 21The test for the interaction is always how we start our modeling in Two-Way ANOVA situations. The ANOVA table suggests that there is little evidence against the null hypothesis of no interaction between the income level and buying cigarettes on the opinions of the respondents towards debt (\(F(4,378) = 0.686\), p-value = 0.6022), so we would conclude that there is likely not an interaction present here and we can drop the interaction from the model. This suggests that the initial assessment that the interaction wasn’t too prominent was correct. We should move to the additive model here but first need to check the assumptions to make sure we can trust this initial test.

library(car)

debt1 <- lm(prodebt ~ incomegp * cigbuy, data = debtRc)

Anova(debt1)## Anova Table (Type II tests)

##

## Response: prodebt

## Sum Sq Df F value Pr(>F)

## incomegp 10.742 4 5.5246 0.0002482

## cigbuy 0.010 1 0.0201 0.8874246

## incomegp:cigbuy 1.333 4 0.6857 0.6022065

## Residuals 183.746 378par(mfrow = c(2,2))

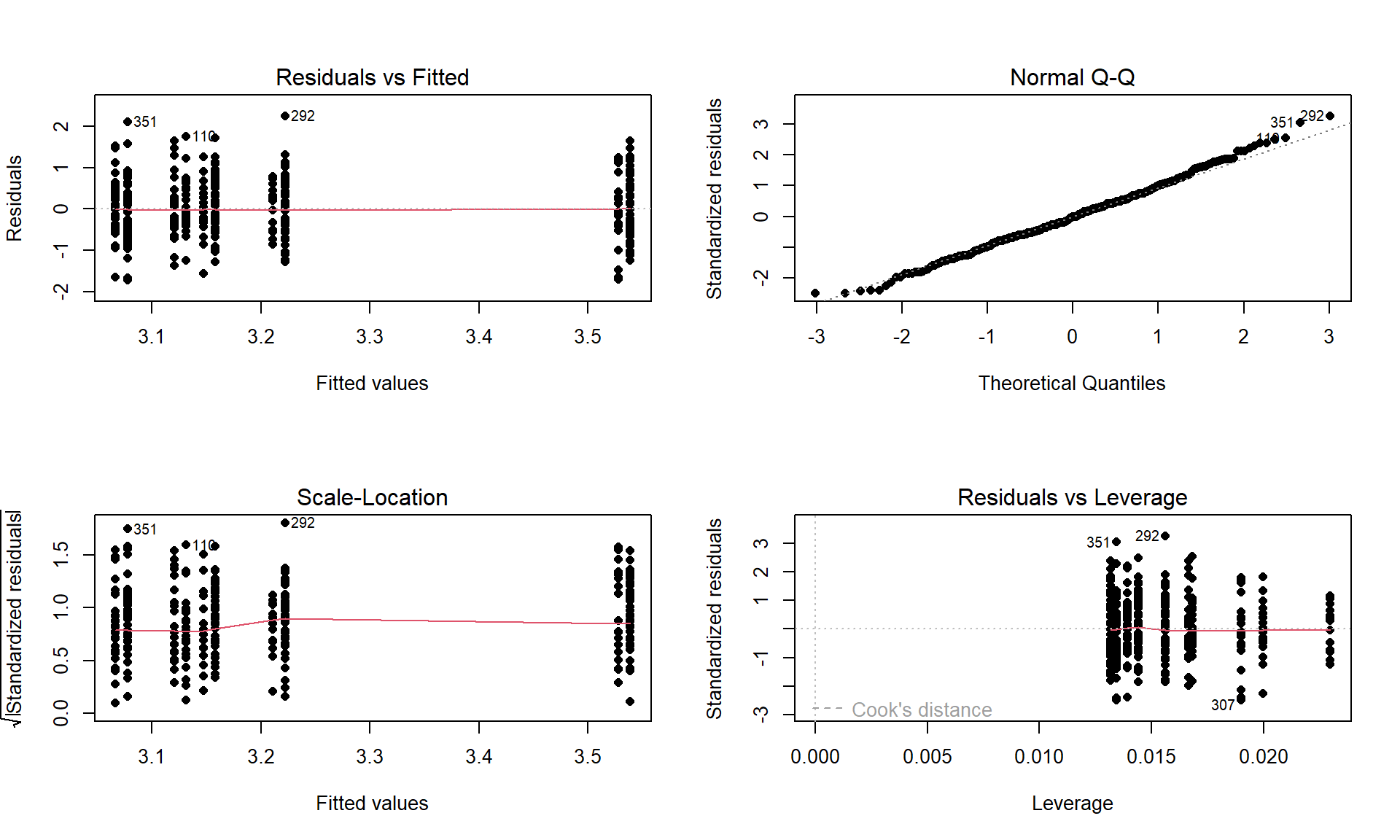

plot(debt1, pch = 16)

Figure 4.16: Diagnostic plot for prodebt by income group and buy cigarettes/not interaction model.

The diagnostic plots (Figure 4.16) seem to be pretty well-behaved with no apparent violations of the normality assumption and no clear evidence of a violation of the constant variance assumption. There is no indication of a problem with the independence assumption because there is no indication of structure to the measurements of the survey respondents that might create dependencies. In observational studies, violations of the independence assumption might come from repeated measures of the same person over time or multiple measurements within the same family/household or samples that are clustered geographically, none of which are part of the survey information we have. The random sampling from a population should allow inferences to a larger population except for that issue of removing partially missing responses so we can’t safely generalize results beyond the complete observations we are using without worry that the missing subjects are systematically different from those we are able to analyze. We also don’t have much information on the exact population sampled, so will just leave this vague here but know that there would be a population these conclusions apply since it was random sample (at least those that would answer the questions). All of this suggests proceeding to fitting and exploring the additive model is reasonable here. No causal inferences are possible because this is an observational study.

After ruling out the interaction of income and cigarette status on opinions about debt, we can focus on the additive model.

Hypotheses (Two sets apply when the additive model is the focus!):

\(H_0\): No difference in means for

prodebtfor income groups in population, given cigarette buying in model\(\Leftrightarrow\) All \(\tau_j\text{'s} = 0\) in additive model.

\(H_A\): Some difference in means for

prodebtfor income group in population, given cigarette buying in model\(\Leftrightarrow\) Not all \(\tau_j\text{'s} = 0\) in additive model.

\(H_0\): No difference in means for

prodebtfor cigarette buying/not in population, given income group in model\(\Leftrightarrow\) All \(\gamma_k\text{'s} = 0\) in additive model.

\(H_A\): Some difference in means for

prodebtfor cigarette buying/not in population, given income group in model\(\Leftrightarrow\) Not all \(\gamma_k\text{'s} = 0\) in additive model.

Validity conditions – discussed above but with new plots for the additive model:

debt1r <- lm(prodebt ~ incomegp + cigbuy, data = debtRc) par(mfrow = c(2,2)) plot(debt1r, pch = 16)

Figure 4.17: Diagnostic plot of additive model for

prodebtby income group and whether they buy cigarettes/not.Constant Variance:

In the Residuals vs Fitted and the Scale-Location plots in Figure 4.17, the differences in variability among groups is minor and nothing suggests a violation. If you change models, you should always revisit the diagnostic plots to make sure you didn’t create problems that were not present in more complicated models.

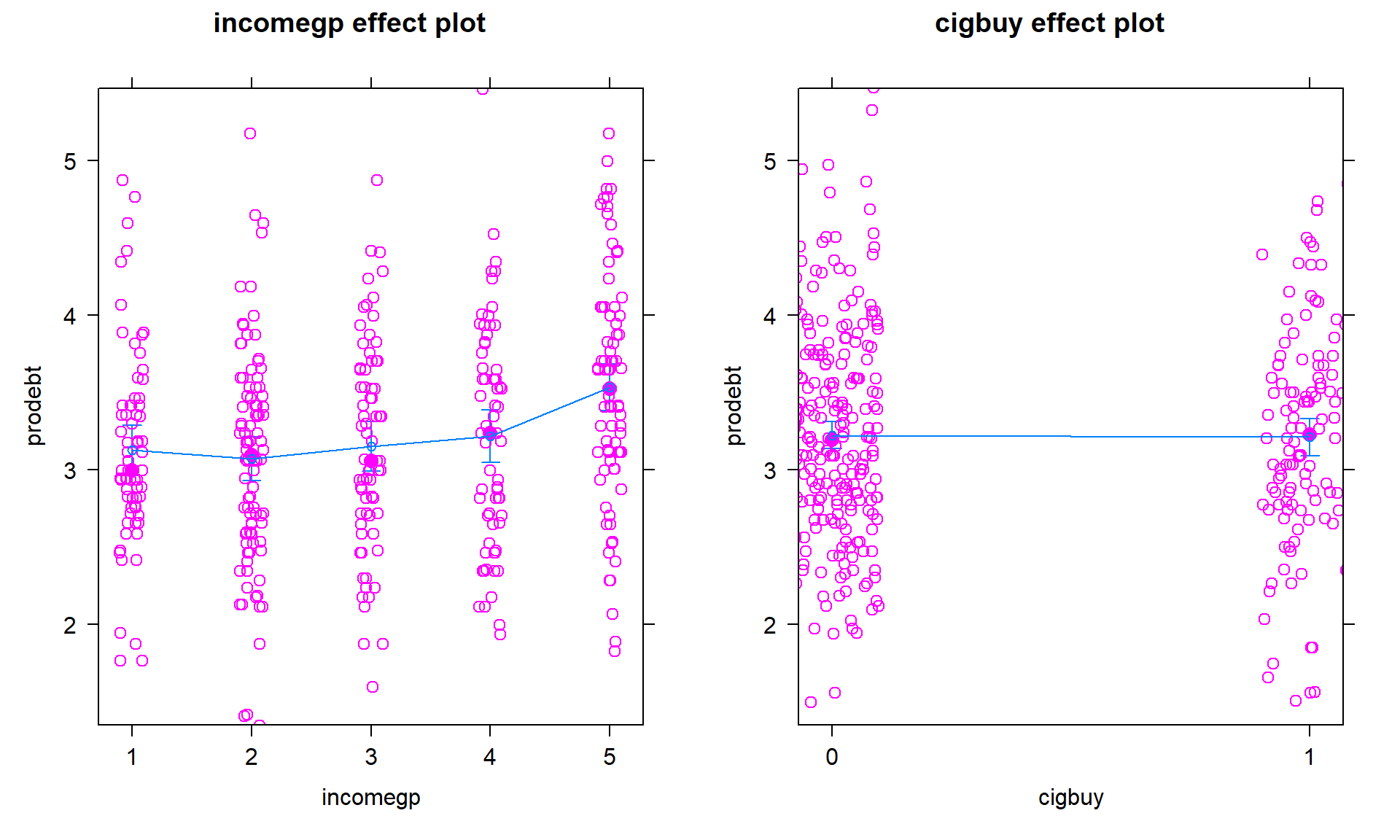

We can also explore the partial residuals here as provided in Figure 4.18. The variability in the partial residuals appears to be similar across the different levels of each predictor, controlled for the other variable, and so does suggest any issues that were missed by just looking at the overall residuals versus fitted values in our regular diagnostic plots. Note how hard it is to see differences in the mean for levels of

cigbuyin this plot relative to the variability in the partial residuals but that the differences in the means inincomegpare at least somewhat obvious.

plot(allEffects(debt1r, residuals = T))

Figure 4.18: Term-plot for additive model for

prodebtby income group and whether they buy cigarettes/not with partial residuals.Normality of residuals:

- The QQ-Plot in Figure 4.17 does not suggest a problem with this assumption.

Calculate the test statistics and p-values for the two main effect tests.

Anova(debt1r)## Anova Table (Type II tests) ## ## Response: prodebt ## Sum Sq Df F value Pr(>F) ## incomegp 10.742 4 5.5428 0.0002399 ## cigbuy 0.010 1 0.0201 0.8872394 ## Residuals 185.079 382- The test statistics are \(F(4,382) = 5.54\) and \(F(1,382) = 0.0201\) with p-values of 0.00024 and 0.887.

Conclusions (including for the initial work with the interaction test):

- There was initially little to no evidence against the null hypothesis of no interaction between income group and cigarette buying on pro-debt feelings (\(F(4,378) = 0.686\), p-value = \(0.6022\)) so we would conclude that there is likely not an interaction in the population and the interaction was dropped from the model. There is strong evidence against the null hypothesis of no difference in the mean pro-debt feelings in the population across the income groups, after adjusting for cigarette buying (\(F(4,382) = 5.54\), p-value = \(0.00024\)), so we would conclude that there is some difference in them. There is little evidence against the null hypothesis of no difference in the mean pro-debt feelings in the population based on cigarette buying/not, after adjusting for income group (\(F(1,382) = 0.0201\), p-value = \(0.887\)), so we would conclude that there is probably not a difference across cigarette buying/not and could consider dropping this term from the model.

So we learned that the additive model was more appropriate for these responses and that the results resemble Scenario 2 or 3 with only one main effect being important. In the additive model, the coefficients can be interpreted as shifts from the baseline after controlling for the other variable in the model.

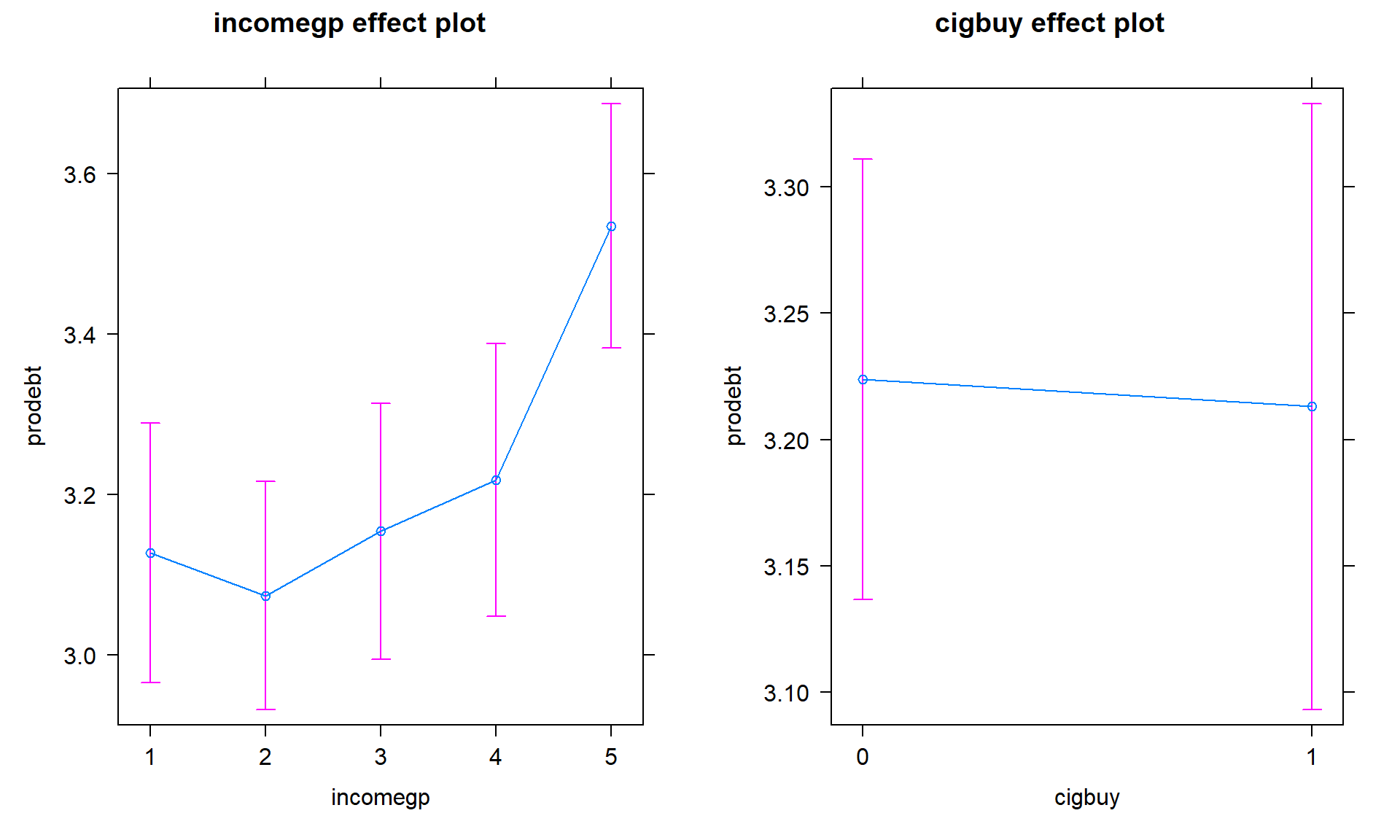

Size:

- Figure 4.19 shows the increasing average comfort with being in

debt as the income groups go up except between groups 1 and 2 where 1 is a little

higher than two. Being a cigarette buyer was related to a lower comfort level

with debt but is really no different from those that did not report buying

cigarettes. It would be possible to consider follow-up tests akin to the Tukey’s

HSD comparisons for the levels of

incomegphere but that is a bit beyond the scope of this course – focus on the estimated mean for the 5th income group being over 3.5 and none of the others over 3.2. That seems like an interesting although modest difference in mean responses across income groups after controlling for cigarette purchasing or not.

plot(allEffects(debt1r))

Figure 4.19: Term-plots for the